EO Exploitation Platform Common Architecture

Master System Design Document

EOEPCA.SDD.001

EUROPEAN SPACE AGENCY CONTRACT REPORT

TELESPAZIO VEGA UK Ltd

AMENDMENT HISTORY

This document shall be amended by releasing a new edition of the document in its entirety.

The Amendment Record Sheet below records the history and issue status of this document.

Table 1. Amendment Record Sheet

| ISSUE | DATE | REASON |
|---|---|---|
| 1.1 | In Progress | Updates during development of Reference Implementation |
| 1.0 | 02/08/2019 | Issue for domain expert ITT |
| 0.6 | 18/07/2019 | Added XACML description + PDF template modifications |
| 0.5 | 09/07/2019 | Added Client Library + Billing |
| 0.4 | 21/06/2019 | Added Platform API |
| 0.3 | 13/05/2019 | Added content for Processing & Chaining and Resource Management |
| 0.2 | 25/04/2019 | Re-work IAM approach |
| 0.1 | 24/04/2019 | Initial in-progress draft |

1. Introduction

1.1. Purpose and Scope

This document presents the Master System Design for the Common Architecture.

1.2. Structure of the Document

- Section 2 - Context: Provides the context for Exploitation Platforms within the ecosystem of EO analysis.
- Section 3 - Design Overview: Provides an overview of the Common Architecture and the domain areas.
- Section 4 - User Management: Describes the User Management domain area.
- Section 5 - Processing and Chaining: Describes the Processing & Chaining domain area.
- Section 6 - Resource Management: Describes the Resource Management domain area.
- Section 7 - Platform API: Describes the Platform API, covering all domain areas.
- Section 8 - Web Portal: Describes the Web Portal, covering all domain areas.

1.3. Reference Documents

The following is a list of Reference Documents with a direct bearing on the content of this document.

| Reference | Document Details | Version |
|---|---|---|
| [EOEPCA-UC] | EOEPCA - Use Case Analysis | Issue 1.0 |
| [EP-FM] | Exploitation Platform - Functional Model | Issue 1.0 |
| [TEP-OA] | Thematic Exploitation Platform Open Architecture | Issue 1 |
| | OGC Testbed-14: WPS-T Engineering Report | 18-036r1 |
| | OGC WPS 2.0 REST/JSON Binding Extension, Draft | 1.0-draft |
| | Common Workflow Language Specifications | v1.0.2 |
| | OGC Testbed-13, EP Application Package Engineering Report | 17-023 |
| | OGC Testbed-13, Application Deployment and Execution Service Engineering Report | 17-024 |
| | OGC Testbed-14, Application Package Engineering Report | 18-049r1 |
| | OGC Testbed-14, ADES & EMS Results and Best Practices Engineering Report | 18-050r1 |
| | OpenSearch GEO: OpenSearch Geo and Time Extensions | 10-032r8 |
| | OpenSearch EO: OGC OpenSearch Extension for Earth Observation | 13-026r9 |
| | OGC EO Dataset Metadata GeoJSON(-LD) Encoding Standard | 17-003r1/17-084 |
| | OGC OpenSearch-EO GeoJSON(-LD) Response Encoding Standard | 17-047 |
| | The Payment Card Industry Data Security Standard | v3.2.1 |
| | CEOS OpenSearch Best Practice | v1.2 |
| | OpenID Connect Core 1.0 | v1.0 |
| | OGC Catalogue Services 3.0 Specification - HTTP Protocol Binding (Catalogue Services for the Web) | v3.0 |
| | OGC Web Map Server Implementation Specification | v1.3.0 |
| | OGC Web Map Tile Service Implementation Standard | v1.0.0 |
| | OGC Web Feature Service 2.0 Interface Standard – With Corrigendum | v2.0.2 |
| | OGC Web Coverage Service (WCS) 2.1 Interface Standard - Core | v2.1 |
| | Web Coverage Processing Service (WCPS) Language Interface Standard | v1.0.0 |
| | Amazon Simple Storage Service REST API | API Version 2006-03-01 |

1.4. Terminology

The following terms are used in the Master System Design.

| Term | Meaning |

|---|---|

Admin |

User with administrative capability on the EP |

Algorithm |

A self-contained set of operations to be performed, typically to achieve a desired data manipulation. The algorithm must be implemented (codified) for deployment and execution on the platform. |

Analysis Result |

The Products produced as output of an Interactive Application analysis session. |

Analytics |

A set of activities aimed to discover, interpret and communicate meaningful patters within the data. Analytics considered here are performed manually (or in a semi-automatic way) on-line with the aid of Interactive Applications. |

Application Artefact |

The 'software' component that provides the execution unit of the Application Package. |

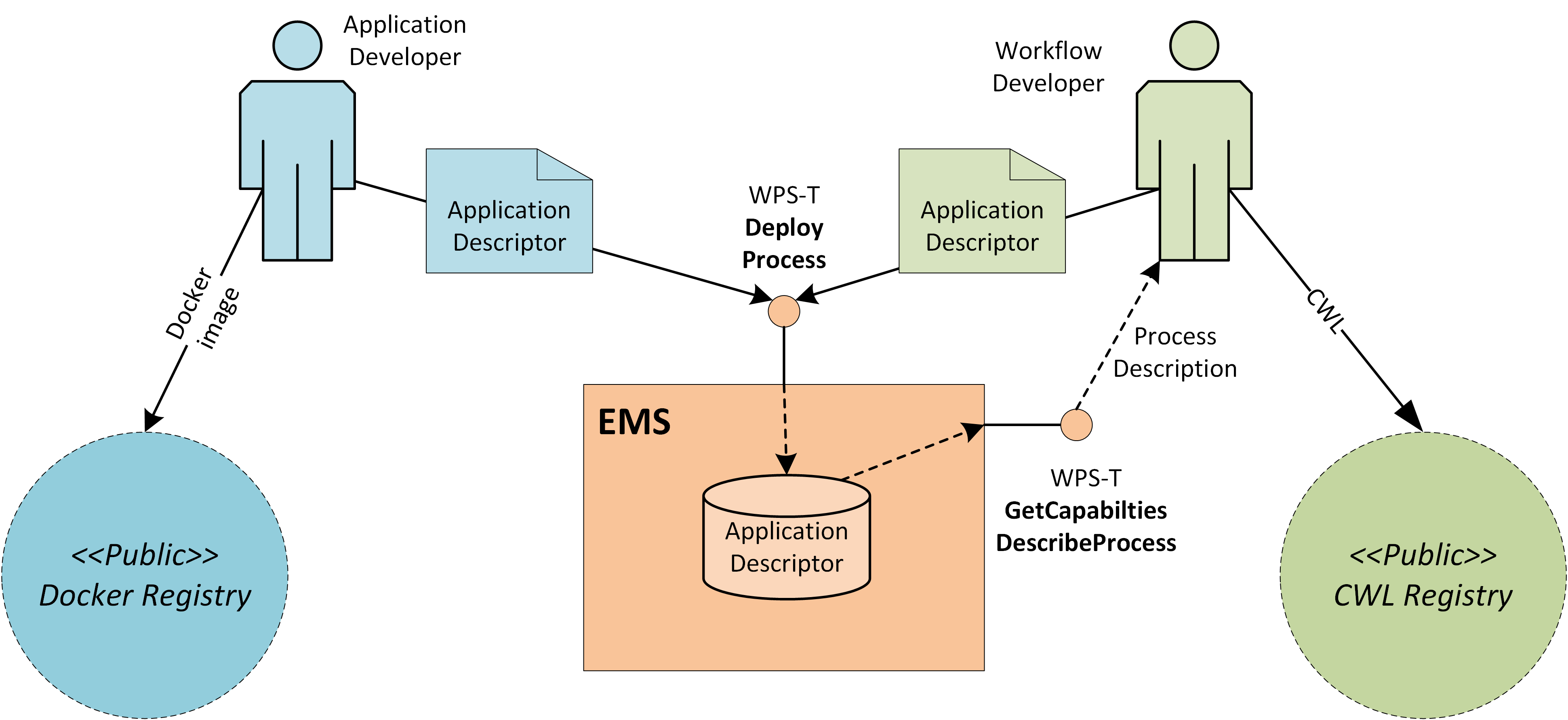

Application Deployment and Execution Service (ADES) |

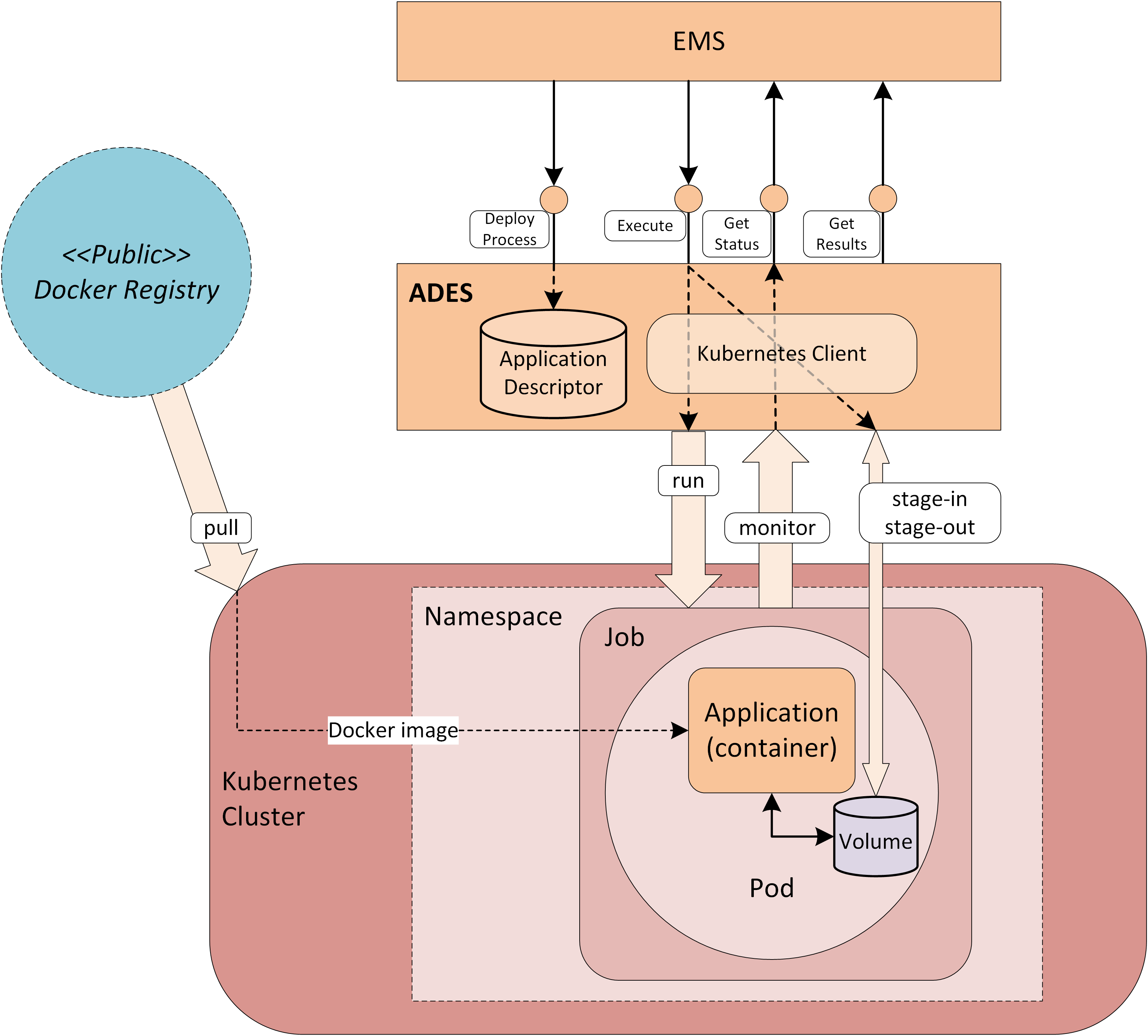

WPS-T (REST/JSON) service that incorporates the Docker execution engine, and is responsible for the execution of the processing service (as a WPS request) within the ‘target’ Exploitation Platform. |

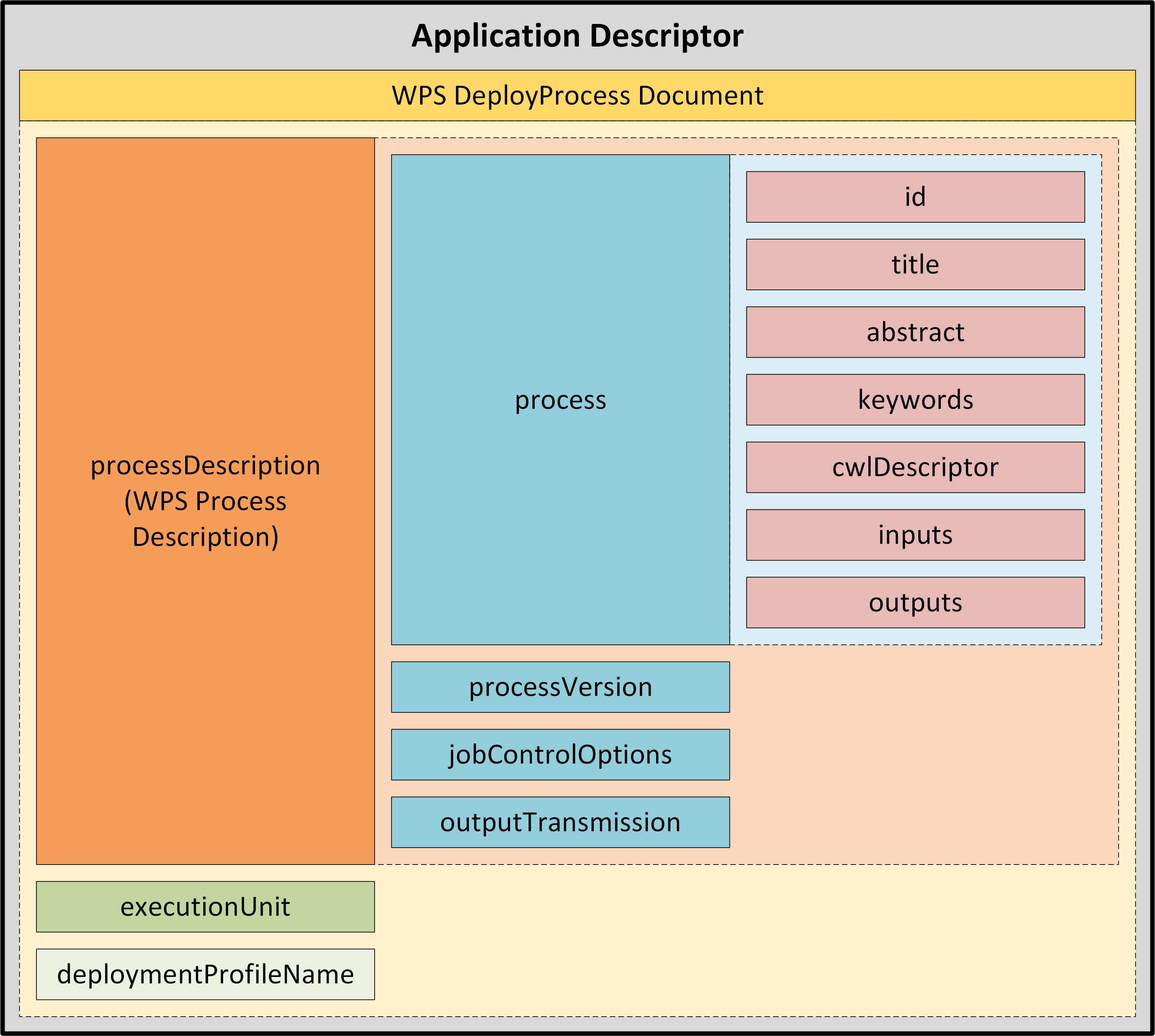

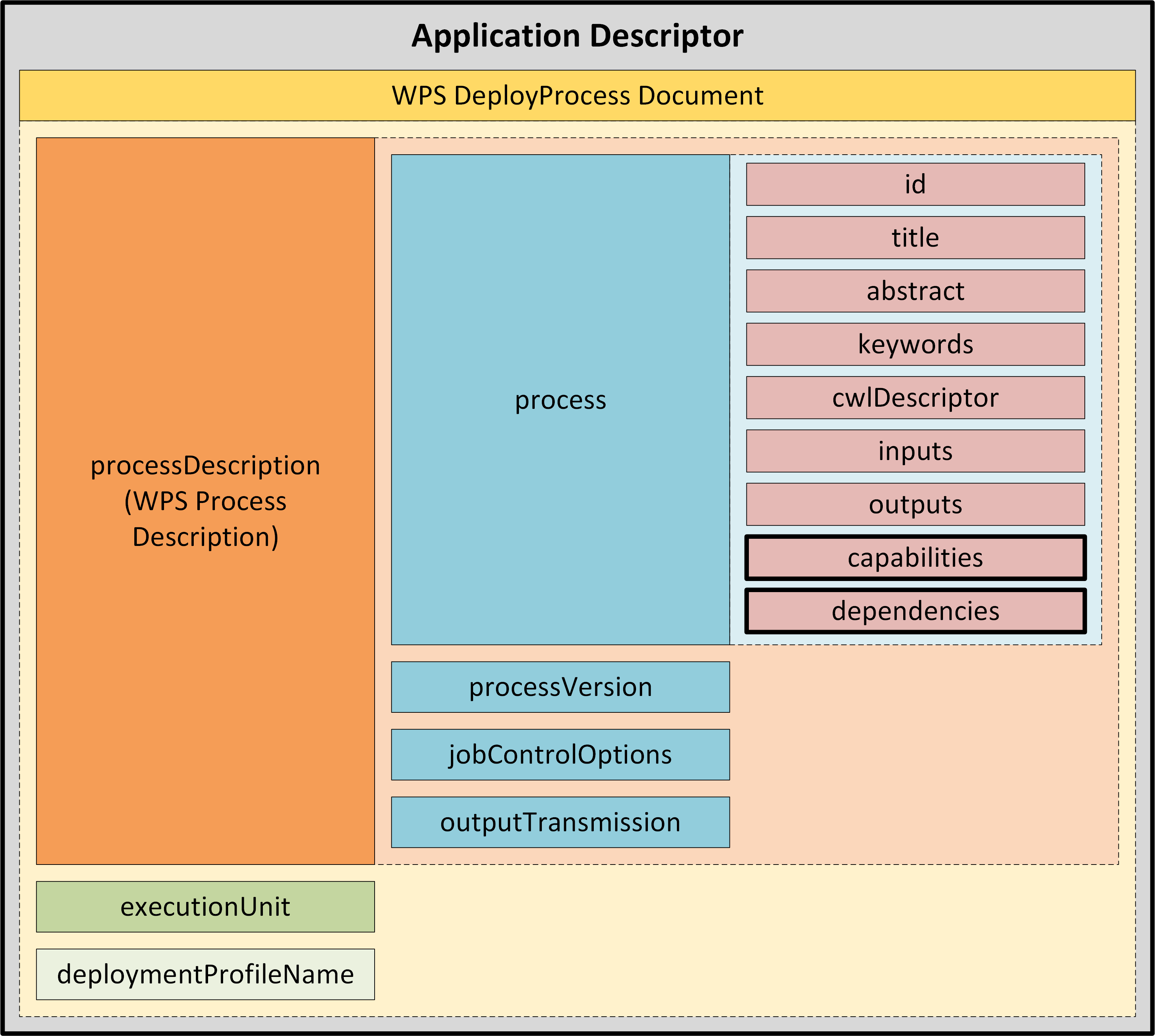

Application Descriptor |

A file that provides the metadata part of the Application Package. Provides all the metadata required to accommodate the processor within the WPS service and make it available for execution. |

Application Package |

A platform independent and self-contained representation of a software item, providing executable, metadata and dependencies such that it can be deployed to and executed within an Exploitation Platform. Comprises the Application Descriptor and the Application Artefact. |

Bulk Processing |

Execution of a Processing Service on large amounts of data specified by AOI and TOI. |

Code |

The codification of an algorithm performed with a given programming language - compiled to Software or directly executed (interpretted) within the platform. |

Compute Platform |

The Platform on which execution occurs (this may differ from the Host or Home platform where federated processing is happening) |

Consumer |

User accessing existing services/products within the EP. Consumers may be scientific/research or commercial, and may or may not be experts of the domain |

Data Access Library |

An abstraction of the interface to the data layer of the resource tier. The library provides bindings for common languages (including python, Javascript) and presents a common object model to the code. |

Development |

The act of building new products/services/applications to be exposed within the platform and made available for users to conduct exploitation activities. Development may be performed inside or outside of the platform. If performed outside, an integration activity will be required to accommodate the developed service so that it is exposed within the platform. |

Discovery |

User finds products/services of interest to them based upon search criteria. |

Execution |

The act to start a Processing Service or an Interactive Application. |

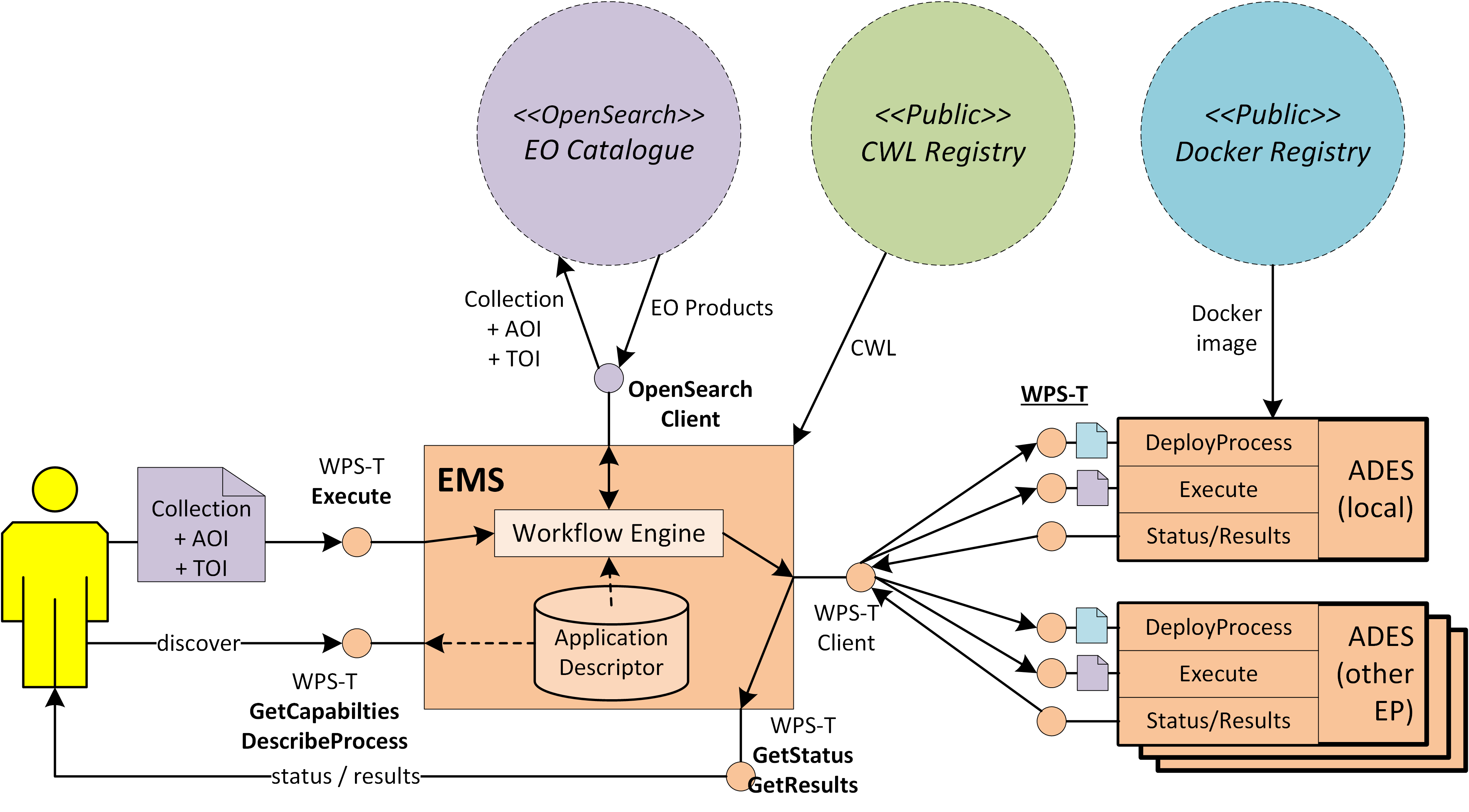

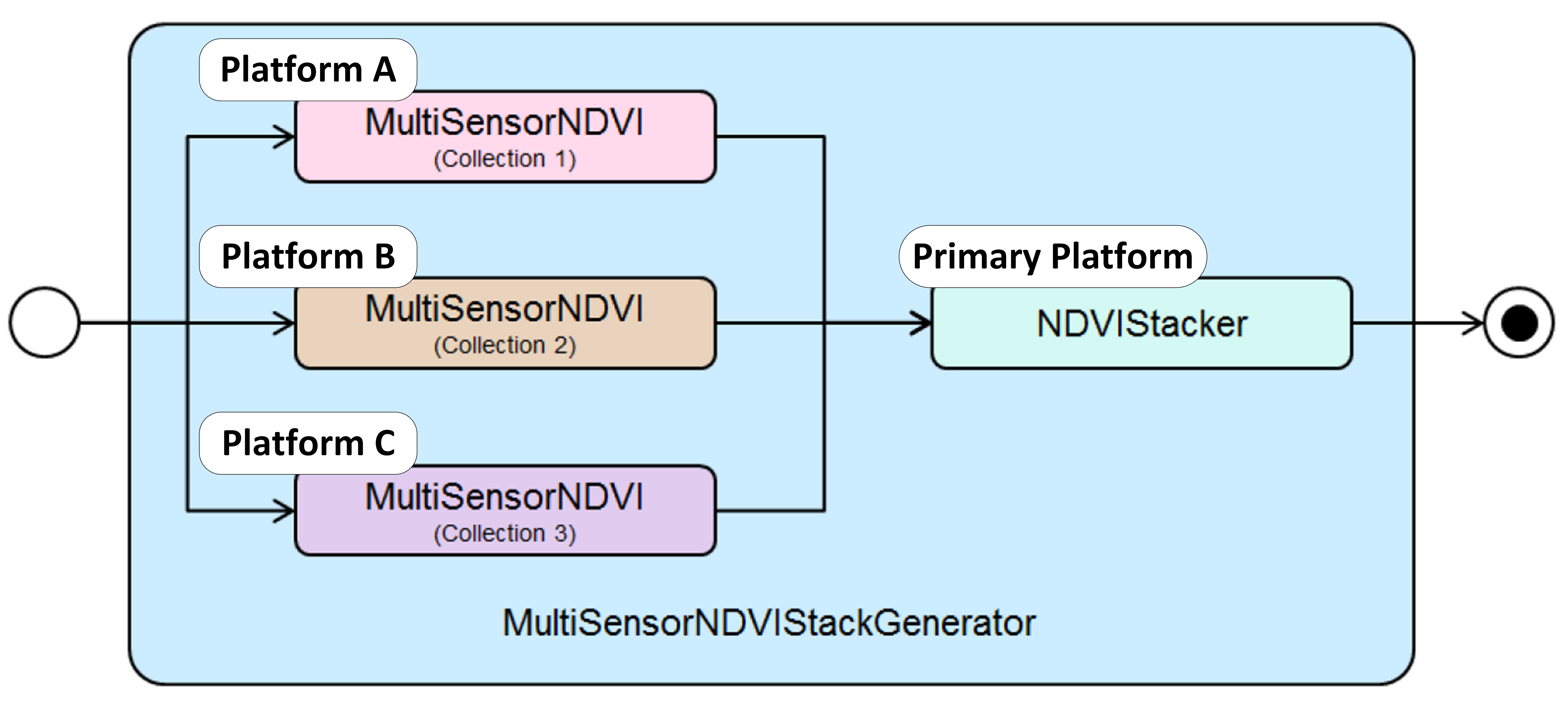

Execution Management Service (EMS) |

The EMS is responsible for the orchestration of workflows, including the possibility of steps running on other (remote) platforms, and the on-demand deployment of processors to local/remote ADES as required. |

Expert |

User developing and integrating added-value to the EP (Scientific Researcher or Service Developer) |

Exploitation Tier |

The Exploitation Tier represents the end-users who exploit the services of the platform to perform analysis, or using high-level applications built-in on top of the platform’s services |

External Application |

An application or script that is developed and executed outside of the Exploitation Platform, but is able to use the data/services of the EP via a programmatic interface (API). |

Guest |

An unregistered User or an unauthenticated Consumer with limited access to the EP’s services |

Home Platform |

The Platform on which a User is based or from which an action was initiated by a User |

Host Platform |

The Platform through which a Resource has been published |

Identity Provider (IdP) |

The source for validating user identity in a federated identity system, (user authentication as a service). |

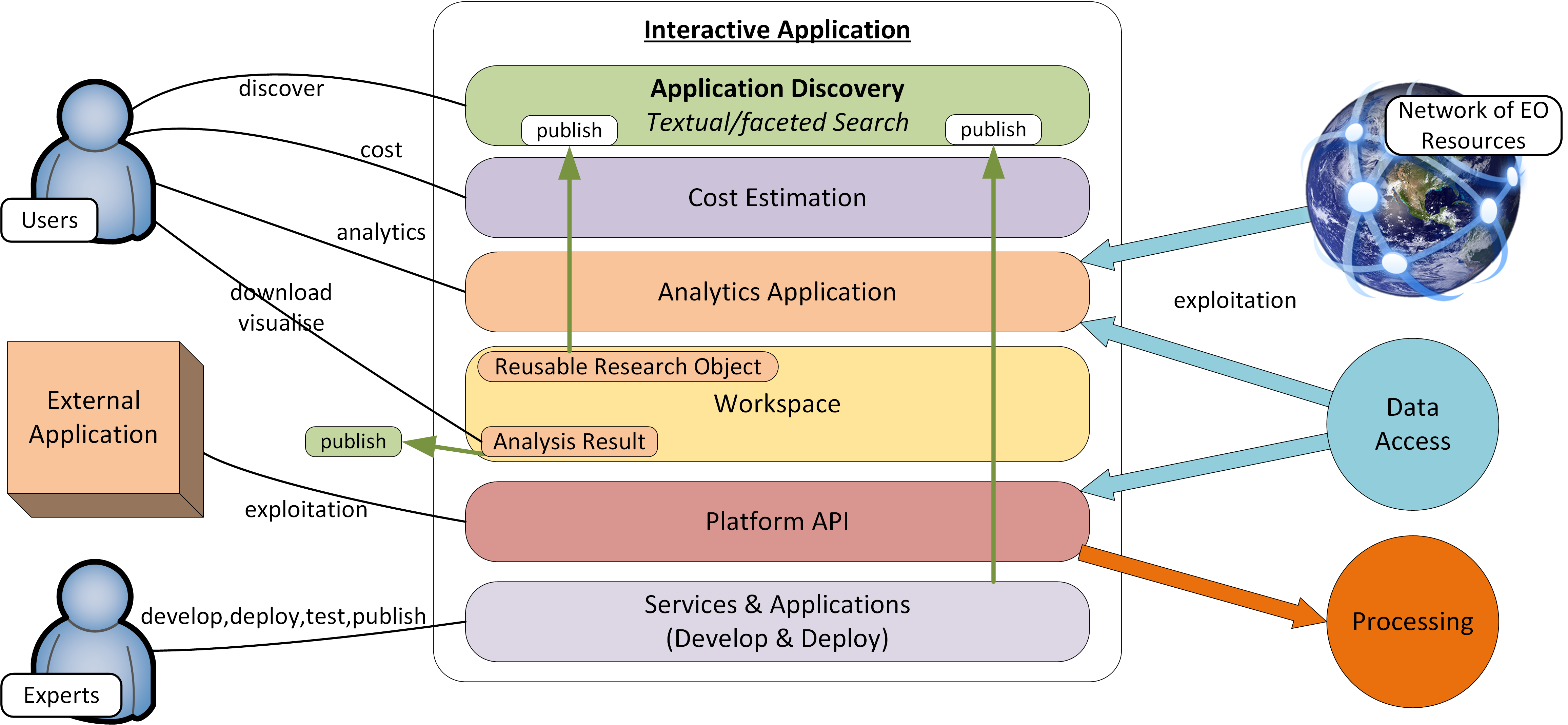

Interactive Application |

A stand-alone application provided within the exploitation platform for on-line hosted processing. Provides an interactive interface through which the user is able to conduct their analysis of the data, producing Analysis Results as output. Interactive Applications include at least the following types: console application, web application (rich browser interface), remote desktop to a hosted VM. |

Interactive Console Application |

A simple Interactive Application for analysis in which a console interface to a platform-hosted terminal is provided to the user. The console interface can be provided through the user’s browser session or through a remote SSH connection. |

Interactive Remote Desktop |

An Interactive Application for analysis provided as a remote desktop session to an OS-session (or directly to a 'native' application) on the exploitation platform. The user will have access to a number of applications within the hosted OS. The remote desktop session is provided through the user’s web browser. |

Interactive Web Application |

An Interactive Application for analysis provided as a rich user interface through the user’s web browser. |

Key-Value Pair |

A key-value pair (KVP) is an abstract data type that includes a group of key identifiers and a set of associated values. Key-value pairs are frequently used in lookup tables, hash tables and configuration files. |

Kubernetes (K8s) |

Container orchestration system for automating application deployment, scaling and management. |

Login Service |

An encapsulation of Authenticated Login provision within the Exploitation Platform context. The Login Service is an OpenID Connect Provider that is used purely for authentication. It acts as a Relying Party in flows with external IdPs to obtain access to the user’s identity. |

Network of EO Resources |

The coordinated collection of European EO resources (platforms, data sources, etc.). |

Object Store |

A computer data storage architecture that manages data as objects. Each object typically includes the data itself, a variable amount of metadata, and a globally unique identifier. |

On-demand Processing Service |

A Processing Service whose execution is initiated directly by the user on an ad-hoc basis. |

Platform (EP) |

An on-line collection of products, services and tools for exploitation of EO data |

Platform Tier |

The Platform Tier represents the Exploitation Platform and the services it offers to end-users |

Processing |

A set of pre-defined activities that interact to achieve a result. For the exploitation platform, comprises on-line processing to derive data products from input data, conducted by a hosted processing service execution. |

Processing Result |

The Products produced as output of a Processing Service execution. |

Processing Service |

A non-interactive data processing that has a well-defined set of input data types, input parameterisation, producing Processing Results with a well-defined output data type. |

Products |

EO data (commercial and non-commercial) and Value-added products and made available through the EP. It is assumed that the Hosting Environment for the EP makes available an existing supply of EO Data |

Resource |

A entity, such as a Product, Processing Service or Interactive Application, which is of interest to a user, is indexed in a catalogue and can be returned as a single meaningful search result |

Resource Tier |

The Resource Tier represents the hosting infrastructure and provides the EO data, storage and compute upon which the exploitation platform is deployed |

Reusable Research Object |

An encapsulation of some research/analysis that describes all aspects required to reproduce the analysis, including data used, processing performed etc. |

Scientific Researcher |

Expert user with the objective to perform scientific research. Having minimal IT knowledge with no desire to acquire it, they want the effort for the translation of their algorithm into a service/product to be minimised by the platform. |

Service Developer |

Expert user with the objective to provide a performing, stable and reliable service/product. Having deeper IT knowledge or a willingness to acquire it, they require deeper access to the platform IT functionalities for optimisation of their algorithm. |

Software |

The compilation of code into a binary program to be executed within the platform on-line computing environment. |

Systematic Processing Service |

A Processing Service whose execution is initiated automatically (on behalf of a user), either according to a schedule (routine) or triggered by an event (e.g. arrival of new data). |

Terms & Conditions (T&Cs) |

The obligations that the user agrees to abide by in regard of usage of products/services of the platform. T&Cs are set by the provider of each product/service. |

Transactional Web Processing Service (WPS-T) |

Transactional extension to WPS that allows adhoc deployment / undeployment of user-provided processors. |

User |

An individual using the EP, of any type (Admin/Consumer/Expert/Guest) |

Value-added products |

Products generated from processing services of the EP (or external processing) and made available through the EP. This includes products uploaded to the EP by users and published for collaborative consumption |

Visualisation |

To obtain a visual representation of any data/products held within the platform - presented to the user within their web browser session. |

Web Coverage Service (WCS) |

OGC standard that provides an open specification for sharing raster datasets on the web. |

Web Coverage Processing Service (WCPS) |

OGC standard that defines a protocol-independent language for the extraction, processing, and analysis of multi-dimentional coverages representing sensor, image, or statistics data. |

Web Feature Service (WFS) |

OGC standard that makes geographic feature data (vector geospatial datasets) available on the web. |

Web Map Service (WMS) |

OGC standard that provides a simple HTTP interface for requesting geo-registered map images from one or more distributed geospatial databases. |

Web Map Tile Service (WMTS) |

OGC standard that provides a simple HTTP interface for requesting map tiles of spatially referenced data using the images with predefined content, extent, and resolution. |

Web Processing Services (WPS) |

OGC standard that defines how a client can request the execution of a process, and how the output from the process is handled. |

Workspace |

A user-scoped 'container' in the EP, in which each user maintains their own links to resources (products and services) that have been collected by a user during their usage of the EP. The workspace acts as the hub for a user’s exploitation activities within the EP |

1.5. Glossary

The following acronyms and abbreviations have been used in this report.

| Term | Definition |
|---|---|
| AAI | Authentication & Authorization Infrastructure |
| ABAC | Attribute Based Access Control |
| ADES | Application Deployment and Execution Service |
| ALFA | Abbreviated Language For Authorization |
| AOI | Area of Interest |
| API | Application Programming Interface |
| CMS | Content Management System |
| CWL | Common Workflow Language |
| DAL | Data Access Library |
| EMS | Execution Management Service |
| EO | Earth Observation |
| EP | Exploitation Platform |
| FUSE | Filesystem in Userspace |
| GeoXACML | Geo-specific extension to the XACML Policy Language |
| IAM | Identity and Access Management |
| IdP | Identity Provider |
| JSON | JavaScript Object Notation |
| K8s | Kubernetes |
| KVP | Key-value Pair |
| M2M | Machine-to-machine |
| OGC | Open Geospatial Consortium |
| PDE | Processor Development Environment |
| PDP | Policy Decision Point |
| PEP | Policy Enforcement Point |
| PIP | Policy Information Point |
| RBAC | Role Based Access Control |
| REST | Representational State Transfer |
| SSH | Secure Shell |
| TOI | Time of Interest |
| UMA | User-Managed Access |
| VNC | Virtual Network Computing |
| WCS | Web Coverage Service |
| WCPS | Web Coverage Processing Service |
| WFS | Web Feature Service |
| WMS | Web Map Service |
| WMTS | Web Map Tile Service |
| WPS | Web Processing Service |
| WPS-T | Transactional Web Processing Service |
| XACML | eXtensible Access Control Markup Language |

2. Context

The Master System Design provides an EO Exploitation Platform architecture that meets the service needs of current and future systems, as defined by the use cases described in [EOEPCA-UC]. These use cases must be explored under 'real world' conditions by engagement with existing deployments, initiatives, user groups, stakeholders and sponsors within the user community and within overlapping communities, in order to gain a fully representative understanding of the functional requirements.

The system design takes into consideration existing precursor architectures (such as the Exploitation Platform Functional Model [EP-FM] and Thematic Exploitation Platform Open Architecture [TEP-OA]), including consideration of state-of-the-art technologies and approaches used by current related projects. The master system design describes functional blocks linked together by agreed standardised interfaces.

The OGC is recognised as important to these activities, both as a reference for the appropriate standards and as a provider of mechanisms to develop and evolve standards as the architecture requires. To meet the design challenges we must apply the applicable existing OGC standards to the full set of federated use cases, in order to expose deficiencies and identify where the standards need to evolve. Standards are equally important in all areas of the Exploitation Platform, including topics such as Authentication & Authorization Infrastructure (AAI), containerisation, and provisioning of virtual cloud resources to ensure portability of compute between different providers of the resource layer.

Data and metadata are fundamental considerations for the creation of an architecture in order to ensure full semantic interoperability between services. In this regard, data modelling and the consideration of data standards are critical activities.

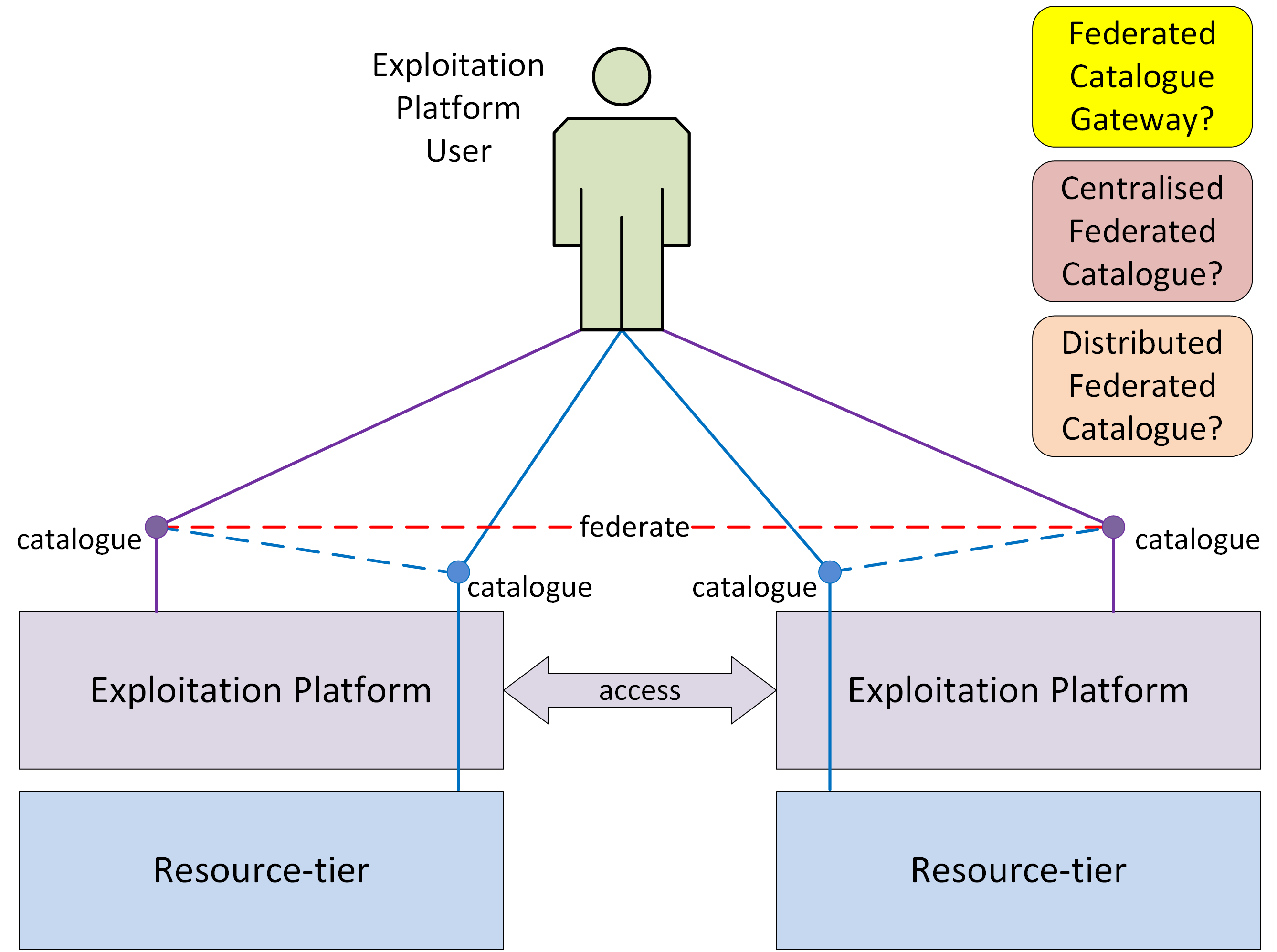

The system design must go beyond the provision of a standalone EO Exploitation Platform, by intrinsically supporting federation of similar EO platforms at appropriate levels of the service stack. The Network of EO Resources seeks, ‘to unite the available - but scattered - European resources in a large federated and open environment’. In such a context, federation provides the potential to greatly enhance the utilization of data and services and provide a stimulus for research and commercial exploitation. From the end-user point of view, the federated system should present itself as a single consolidated environment in which all the federated resources are made available as an integrated system. Thus, the system design must specify federation-level interfaces that support this data and service-level interoperability in a way that is seamless to the end users.

The goal is to create an Integrated Data Exploitation Environment. Users will apply their workflows close to the hosted data, supplemented by their own data. Processing outputs may be hosted as new products that can themselves contribute to the global catalogue. This paradigm can then be extended to encompass the federated set of Exploitation Platforms within the Network of EO Resources. The result is a Federated, Integrated Data Analysis Environment.

A Reference Implementation of the full architecture will be developed to prove the concepts and also to provide an off-the-shelf solution that can be instantiated by future projects to implement their EO Exploitation Platform, thus facilitating their ability to join the federated Network of EO Resources. Thus, the Reference Implementation can be regarded as a set of re-usable platform services, in the context of a re-usable platform architecture.

3. Design Overview

The overall system design has been considered by taking the ‘Exploitation Platform – Functional Model’ [EP-FM] as a starting point and then evolving these ideas in the context of existing interface standards (with some emphasis on the OGC protocol suite) and the need for federated services.

3.1. Domain Areas

The system architecture is designed to meet the use cases as defined in [EOEPCA-UC] and [EP-FM]. [EOEPCA-UC] makes a high-level analysis of the use-cases to identify the main system functionalities organised into domain areas: 'User Management', 'Processing & Chaining' and 'Resource Management'. The high-level functionalities are often met by more than one domain area, and User Management (specifically Identity & Access Management) cuts across all use cases, and forms the basis of all access control restrictions that are applied to platform services and data.

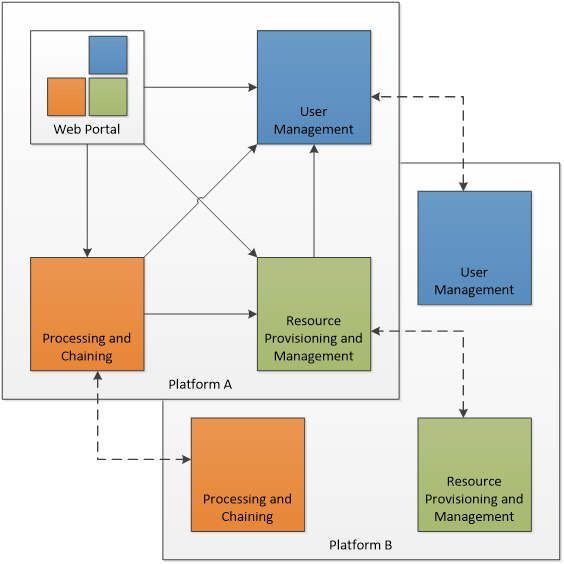

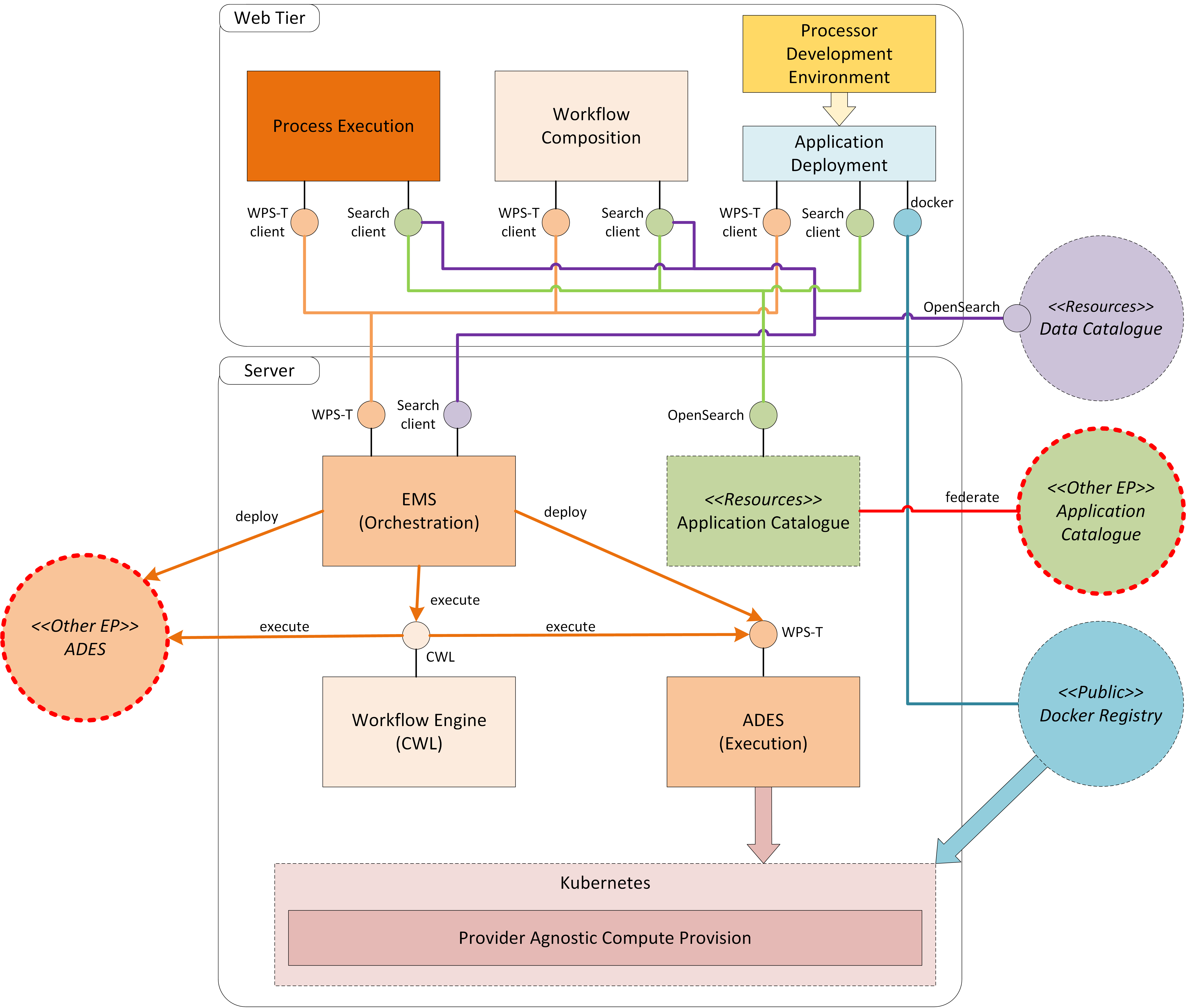

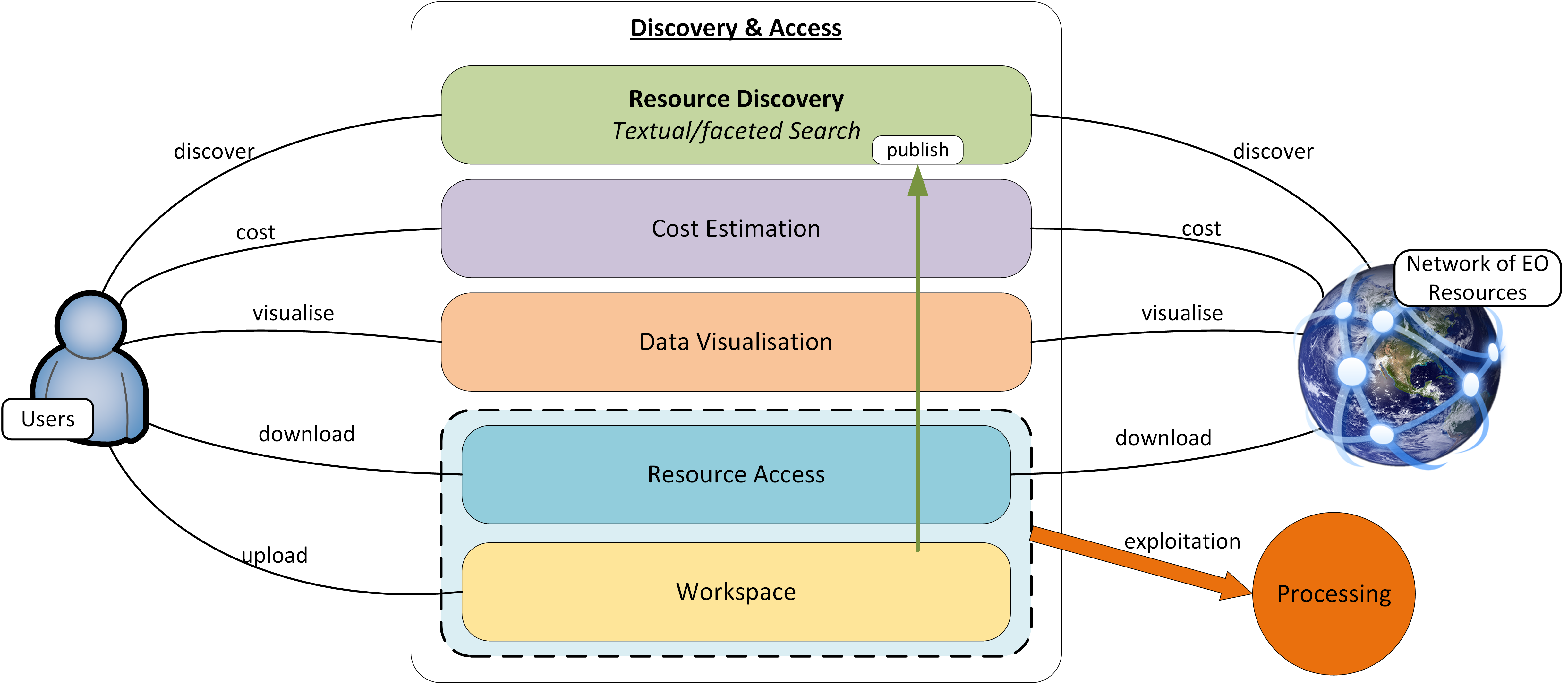

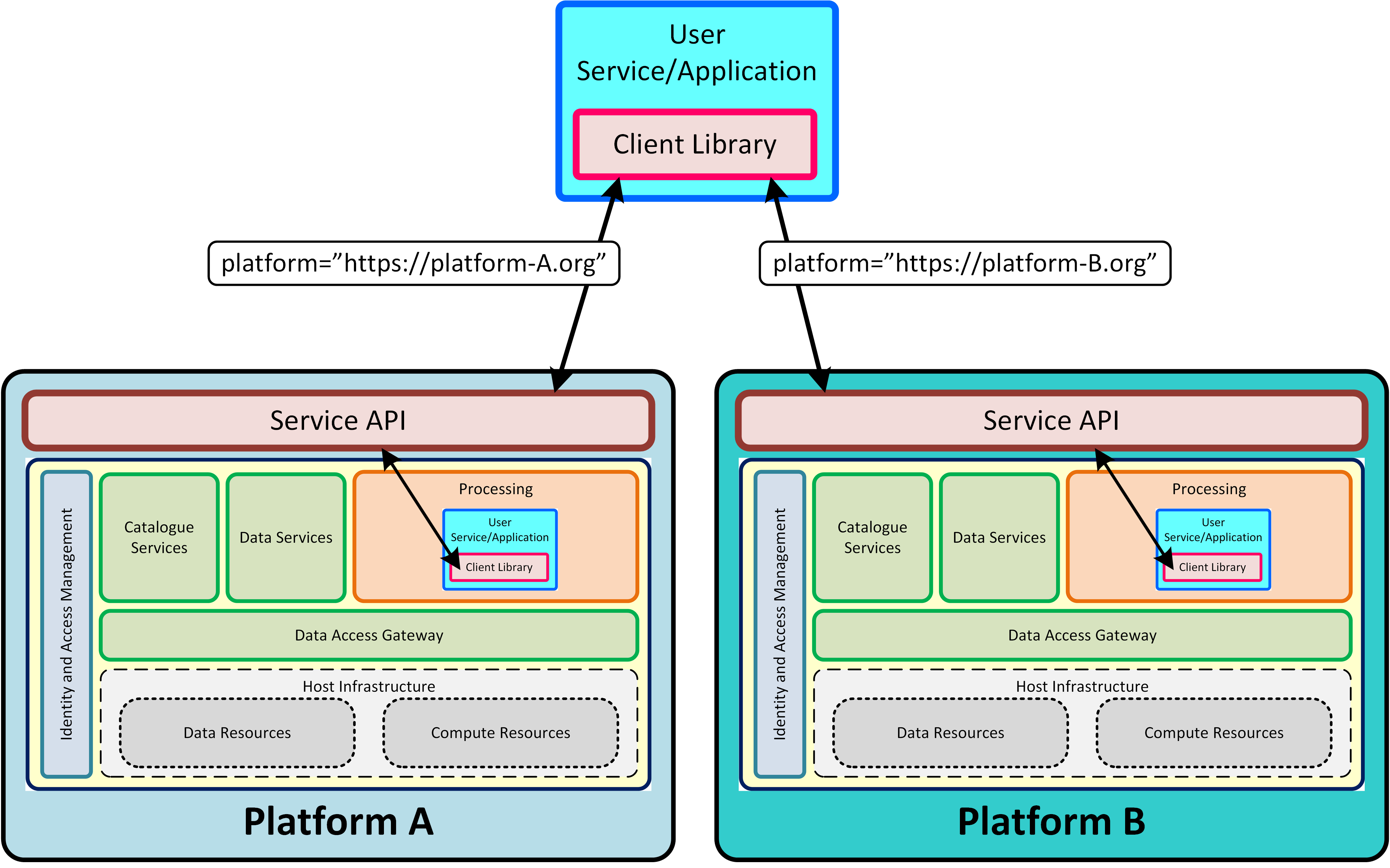

Figure 1 depicts the domain areas as top level component blocks in a Platform ‘A’. The arrows may be read as “uses”, each implying one or more service interfaces.

A potential federation concept is represented by interactions between corresponding blocks in a collaborating Platform ‘B’. The architecture aims to minimise dependencies and is conducive to the principle of subcontracting the implementation to experts in the respective domains. The web portal integrates various client components to form a rich user-facing interface. The Web Portal is depicted as it has interfaces with the other domain areas - but it is not a priority concern for the Common Architecture. Each exploitation platform would be expected to develop its own web interfaces according to its needs.

3.1.1. User Management

Responsible for all aspects related to user identity, authentication, authorization, accounting and billing in a federated system-of-systems environment.

It provides authentication, authorization and accounting services that are required to access other elements of the architecture, including the processing-oriented services and resource-oriented services. Individual resources may have an associated access and/or charging policy, but these have to be checked against the identity of the user. Resource consumption may also be controlled, e.g. by credits and/or quotas associated with the user account. In the Network of EO Resources, a user should not need to create an account on multiple platforms. Therefore some interactions will be required between the User Management functions of different platforms, whether directly or indirectly via a trusted third party.
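As an illustration only, the credit-based control of resource consumption mentioned above might be modelled as follows. The names `UserAccount`, `QuotaExceeded` and `authorise_usage` are hypothetical and are not defined by the architecture; the sketch also assumes a single numeric credit balance per user, which the design does not prescribe.

```python
from dataclasses import dataclass


class QuotaExceeded(Exception):
    """Raised when a request would exceed the user's remaining credits."""


@dataclass
class UserAccount:
    user_id: str
    credits: float  # assumption: one balance per user; a platform may track several


def authorise_usage(account: UserAccount, cost: float) -> float:
    """Deduct `cost` from the account, or refuse if the balance is too low.

    Returns the balance remaining after the deduction.
    """
    if cost < 0:
        raise ValueError("cost must be non-negative")
    if cost > account.credits:
        raise QuotaExceeded(
            f"{account.user_id} has {account.credits} credits, needs {cost}"
        )
    account.credits -= cost
    return account.credits
```

The check is tied to the user account rather than to the resource, reflecting the statement that charging policies have to be evaluated against the identity of the user.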

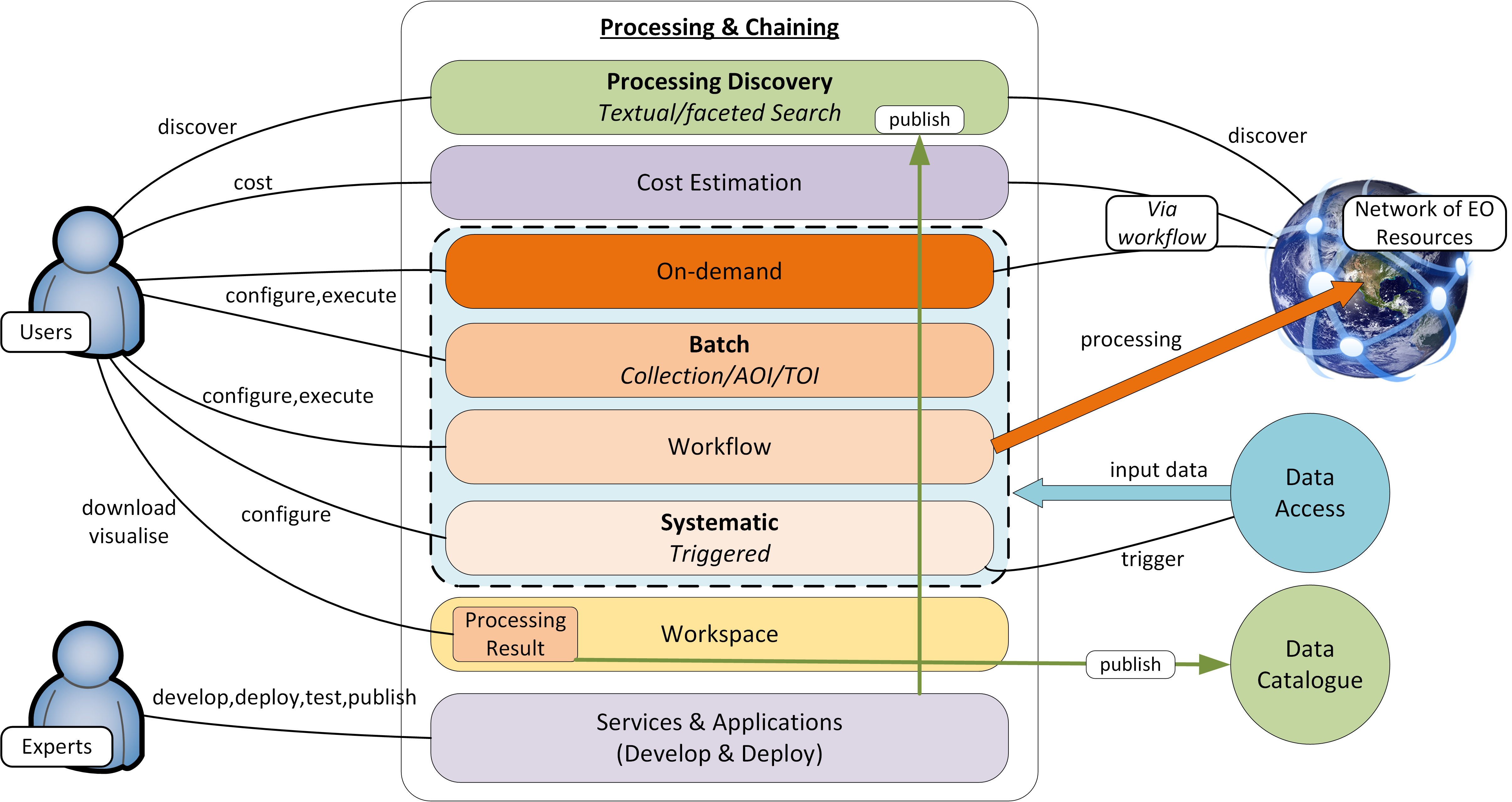

3.1.2. Processing and Chaining

Provides access to a variety of processing functions, tools and applications, as well as execution environments in which to deploy them.

Provides a deployment and execution environment for processing tasks, analysis tools and interactive applications. Supports the chaining of processing tasks in workflows whose execution can include steps executed external to the origin exploitation platform. Handles and abstracts the low-level complexities of the different underlying compute technologies, and ensures the compute layer is scaled in accordance with current demand. Provides an integrated development environment to facilitate development of new processing algorithms and applications. Facilitates the network of EO resources by providing a federated interface to other processing services within the wider EO network.

The development and analysis environment provides a platform for the expert user to develop their own processing chains, experiments and workflows. It integrates with platform catalogue services (for data, processing services and applications) for discovery of available published datasets and processing elements. Subject to appropriate controls and permissions, the user can publish their own processing services and results. Workflows can be executed within the context of the processing facility, with the possibility to execute steps ‘remotely’ in collaborating platforms, with the results being collected for the continuation of the workflow.
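The idea of a workflow whose steps may run on collaborating platforms, with each result collected for the continuation of the workflow, can be sketched as below. This is a minimal illustration under stated assumptions: `Step`, `run_workflow` and `in_process_executor` are hypothetical names, and each platform is stood in for by a plain Python callable rather than a real remote service.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple


@dataclass
class Step:
    name: str
    platform: str                    # platform on which this step should execute
    operation: Callable[[Any], Any]  # stand-in for a deployed processing service


# An executor runs one step on one platform and returns its result.
Executor = Callable[[Step, Any], Any]


def run_workflow(
    steps: List[Step],
    initial_input: Any,
    executors: Dict[str, Executor],
) -> Tuple[Any, List[Tuple[str, str]]]:
    """Run steps in order, feeding each result into the next step.

    Returns the final result and a trace of (step name, platform) pairs.
    """
    data = initial_input
    trace = []
    for step in steps:
        if step.platform not in executors:
            raise KeyError(f"no executor registered for platform {step.platform!r}")
        data = executors[step.platform](step, data)
        trace.append((step.name, step.platform))
    return data, trace


def in_process_executor(step: Step, data: Any) -> Any:
    """Assumed local stand-in; a remote executor would call another platform."""
    return step.operation(data)
```

For example, registering `in_process_executor` for platforms "A" and "B" and running a two-step workflow yields the chained result together with a record of where each step ran.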

3.1.3. Resource Management

Responsible for maintaining an inventory of platform and federated resources, and providing services for data access and visualisation.

Provides storage and cataloguing of all persistent resources. First and foremost, this will contain multidimensional geo-spatial datasets. In addition it may include a variety of heterogeneous data and other resources, such as documentation, Docker images, processing workflows, etc. Handles and abstracts the low-level complexities of different underlying storage technologies and strategies. Facilitates the network of EO resources by providing a federated interface to other data services within the wider EO network.

The catalogue holds corresponding metadata for every published resource item in the local platform storage, as well as entries for resources that are located on remote collaborating platforms. Catalogue search and data access is provided through a range of standard interfaces, which are used by the local Web Portal and Processing & Chaining elements and may be exposed externally as web services.
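A catalogue that holds entries for locally published resources alongside entries for resources hosted on collaborating platforms could look roughly like the following. The class and method names (`CatalogueEntry`, `Catalogue`, `publish_local`, `add_remote`, `search`) are assumptions made for this sketch, and the title substring match is a placeholder for the standard search interfaces the architecture refers to.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class CatalogueEntry:
    resource_id: str
    title: str
    host_platform: str  # platform through which the resource was published


class Catalogue:
    def __init__(self, local_platform: str):
        self.local_platform = local_platform
        # Keyed by (host platform, resource id) so ids may repeat across platforms.
        self._entries: Dict[Tuple[str, str], CatalogueEntry] = {}

    def publish_local(self, resource_id: str, title: str) -> CatalogueEntry:
        """Record a resource held in the local platform storage."""
        entry = CatalogueEntry(resource_id, title, self.local_platform)
        self._entries[(entry.host_platform, entry.resource_id)] = entry
        return entry

    def add_remote(self, entry: CatalogueEntry) -> None:
        """Record a resource that lives on a collaborating platform."""
        if entry.host_platform == self.local_platform:
            raise ValueError("remote entries must name another platform")
        self._entries[(entry.host_platform, entry.resource_id)] = entry

    def search(self, text: str) -> List[CatalogueEntry]:
        """Return entries whose title contains `text`, ignoring case."""
        needle = text.lower()
        return [e for e in self._entries.values() if needle in e.title.lower()]
```

A single `search` call returns local and remote matches together, which is the behaviour an end user of a federated catalogue would expect.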

Access to services and resources is controlled according to an associated authorization policy as defined by the IAM approach. This component may interact with corresponding peer components on other platforms - for example to synchronise catalogue entries.

The user has a personal workspace in which to upload files, organise links to resources of interest (services/application/data), and receive/manage results output from processing executions. Shared workspaces for collaboration can be similarly provisioned. The ingestion of new data is controlled to ensure the quality of any published resource, including associated metadata, and to maintain the integrity of the catalogue.

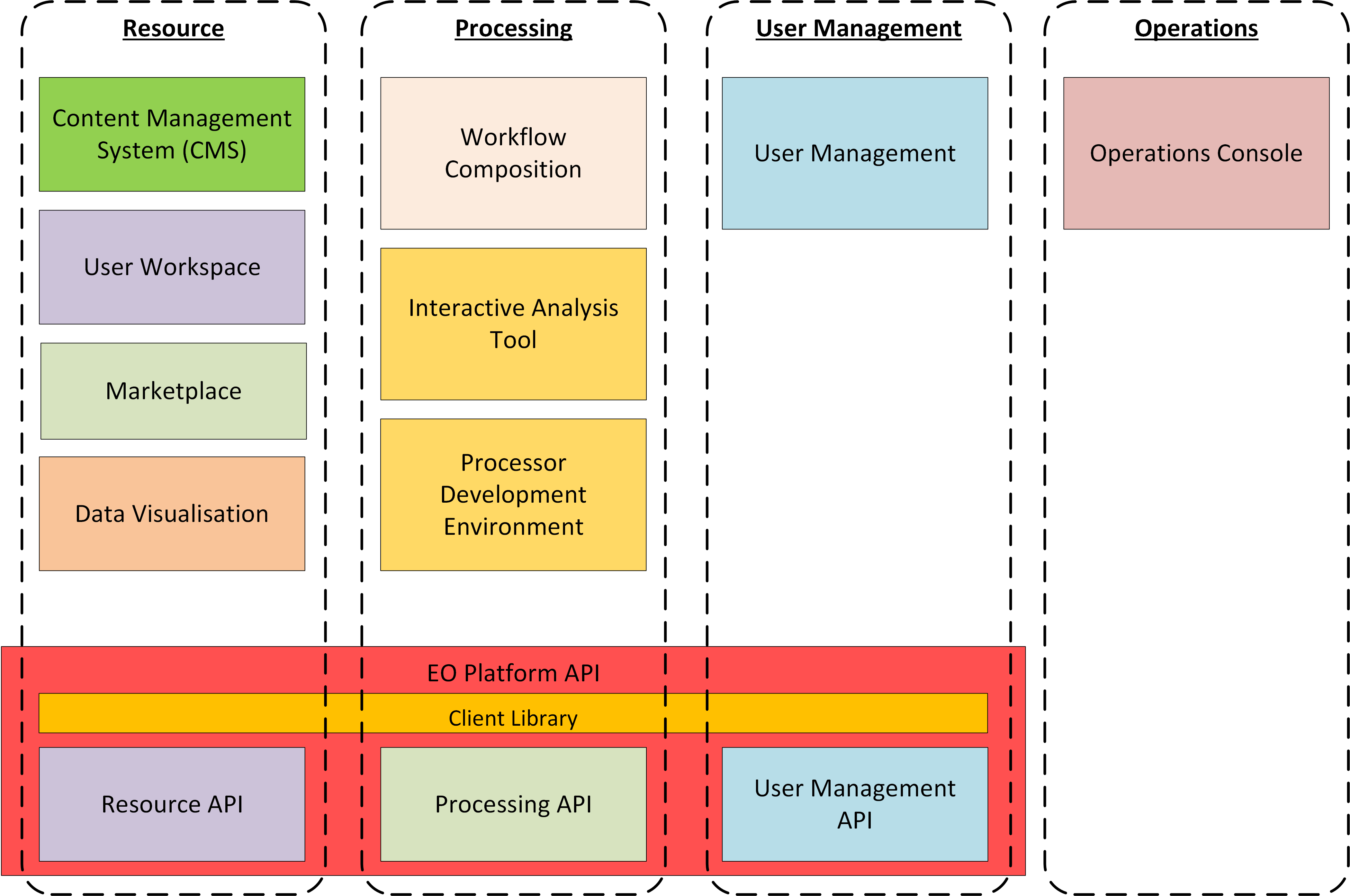

3.1.4. Platform API

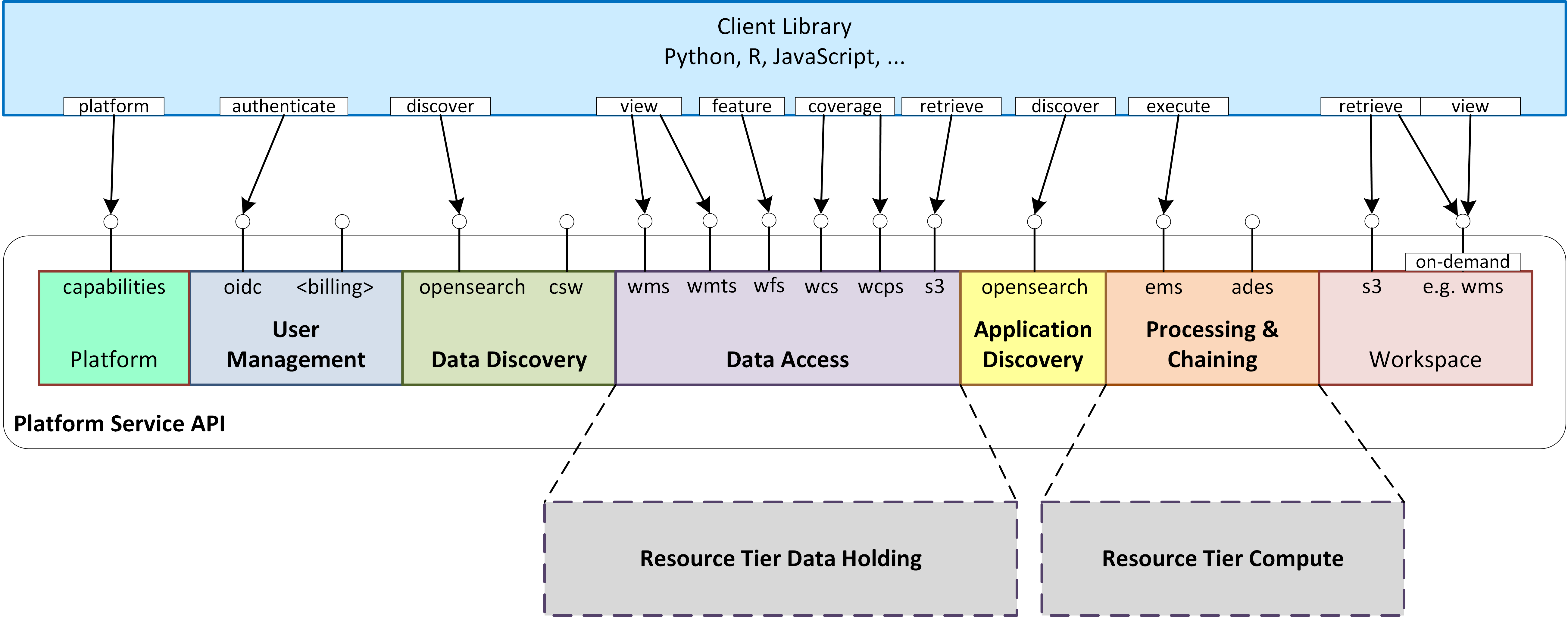

Defines standard interfaces at both service and programmatic levels.

The Service API and its associated Client Library together present a standard platform interface against which analysis and exploitation activities may be developed, and through which platform services can be federated. The Platform API encourages interoperation between platforms and provides a consistent and portable programming paradigm for expert users.

3.1.5. Web Portal

Presents the platform user interface for interacting with the local resources and processing facilities, as well as the wider network of EO resources.

The Web Portal provides the user interface (themed and branded according to the owning organisation) through which the user discovers the data/services available within the platform, and the analysis environment through which they can exploit these resources. It provides a rich, interactive web interface for discovering and working with all kinds of resources, including EO data, processing and documentation. It includes web service clients for smart search and data visualisations. It provides a workspace for developing and deploying processing algorithms, workflows, experiments and applications, and publishing results. It includes support and collaboration tools for the community.

The Web Portal integrates various web service clients that use services provided by the specialist domains (Processing, Resource, User) on the local platform and collaborating platforms.

3.2. Architecture Layers

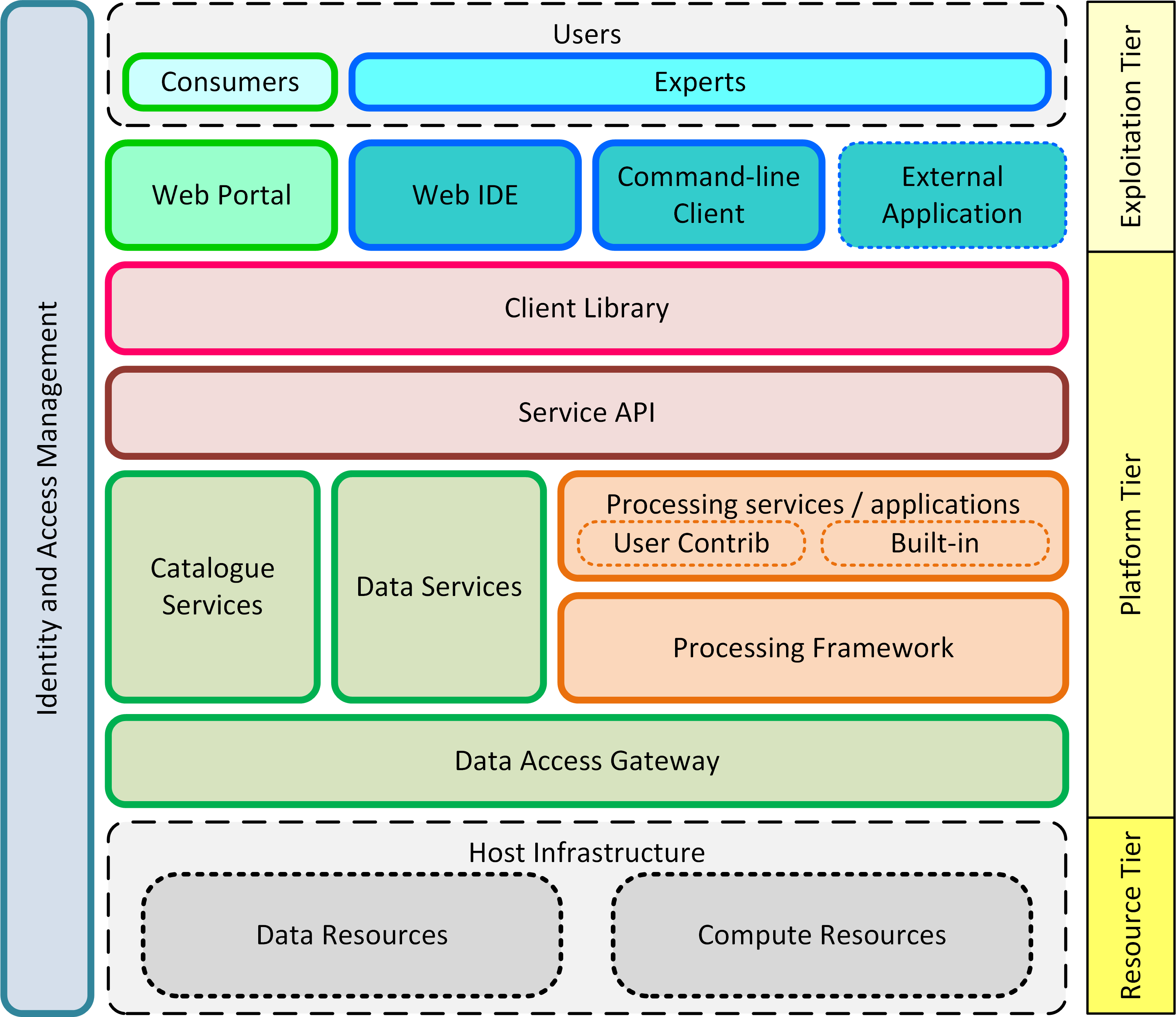

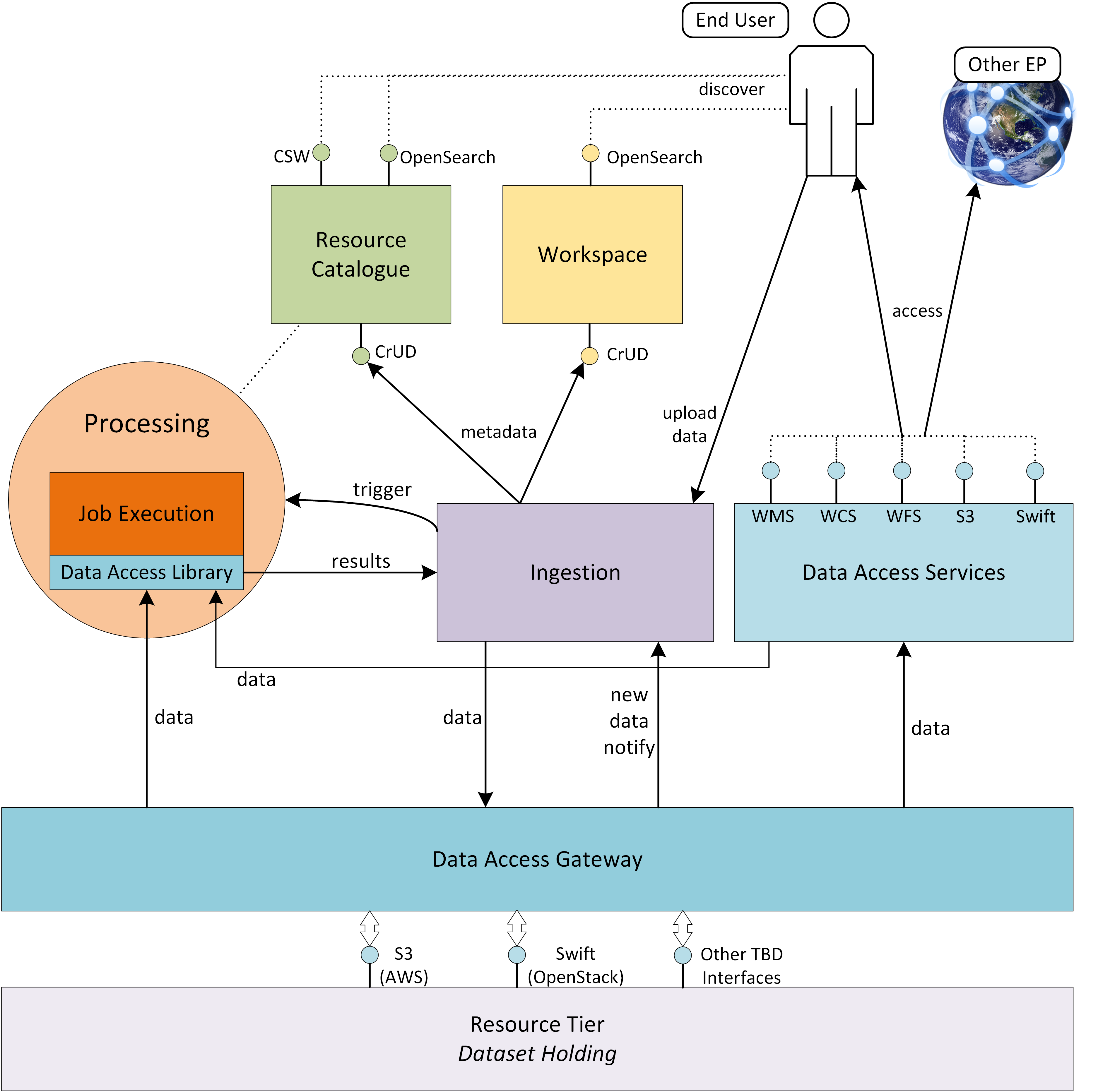

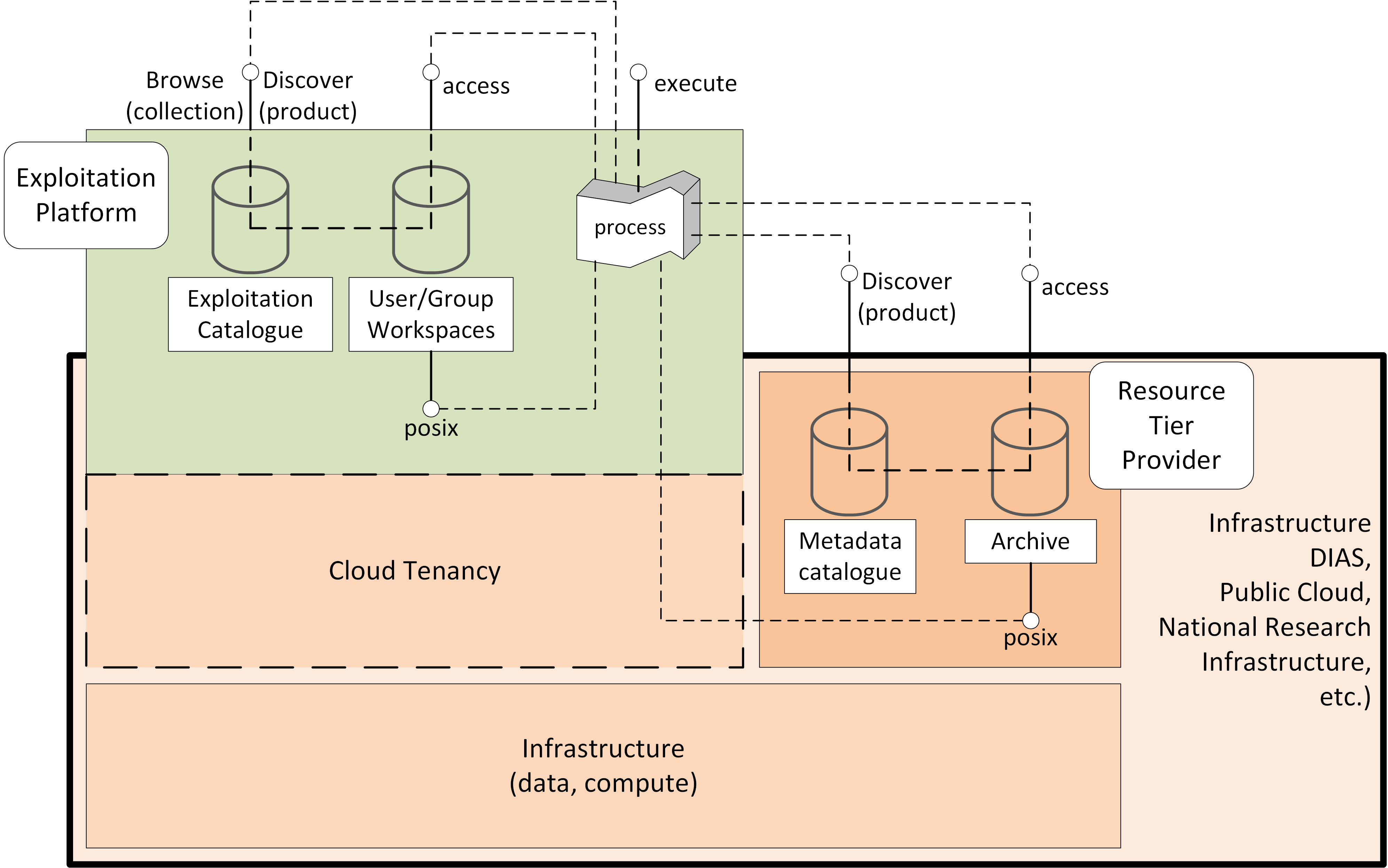

Figure 2 provides a simplified architectural view that illustrates the broad architecture layers of the Exploitation Platform, presented in the context of the infrastructure in which it is hosted and the end-users performing exploitation activities.

- Resource Tier: The Resource Tier represents the hosting infrastructure and provides the EO data, storage and compute upon which the exploitation platform is deployed.
- Platform Tier: The Platform Tier represents the Exploitation Platform and the services it offers to end-users. The layers comprising the Platform Tier are further described below.
- Exploitation Tier: The Exploitation Tier represents the end-users who exploit the services of the platform to perform analysis, or who use high-level applications built on top of the platform’s services.

The Exploitation Platform builds upon the services provided by the hosting infrastructure - specifically accessing its data holding and using its compute resources. The components providing the EP services are deployed within the compute offering, with additional compute resources being provisioned on-demand to support end-user analysis activities.

The EP’s services access the data resources through a Data Access Gateway that provides an abstraction of the data access interface provided by the resource tier. This abstraction provides a 'standard' data access semantic that can be relied upon by other EP services - thus isolating specific data access concerns of the resource tier to a single EP component.
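The role of the Data Access Gateway as a single point that hides resource-tier specifics can be illustrated with a small sketch. Everything named here (`DataAccessBackend`, `InMemoryBackend`, `DataAccessGateway`, and the use of a URL-style scheme prefix to select a backend) is an assumption for illustration and is not specified by the architecture.

```python
from abc import ABC, abstractmethod
from typing import Dict


class DataAccessBackend(ABC):
    """Hypothetical interface to one resource-tier storage technology."""

    @abstractmethod
    def read(self, path: str) -> bytes:
        """Return the bytes stored at `path`."""


class InMemoryBackend(DataAccessBackend):
    """Stand-in backend so the sketch runs without real storage."""

    def __init__(self, objects: Dict[str, bytes]):
        self._objects = objects

    def read(self, path: str) -> bytes:
        return self._objects[path]


class DataAccessGateway:
    """Routes scheme-qualified references to the backend for that scheme."""

    def __init__(self) -> None:
        self._backends: Dict[str, DataAccessBackend] = {}

    def register(self, scheme: str, backend: DataAccessBackend) -> None:
        self._backends[scheme] = backend

    def read(self, reference: str) -> bytes:
        # Split "scheme://path" and dispatch on the scheme.
        scheme, separator, path = reference.partition("://")
        if not separator or scheme not in self._backends:
            raise ValueError(f"unsupported data reference: {reference!r}")
        return self._backends[scheme].read(path)
```

Other EP services would call only `DataAccessGateway.read`, so a change of storage technology in the resource tier is confined to registering a different backend.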

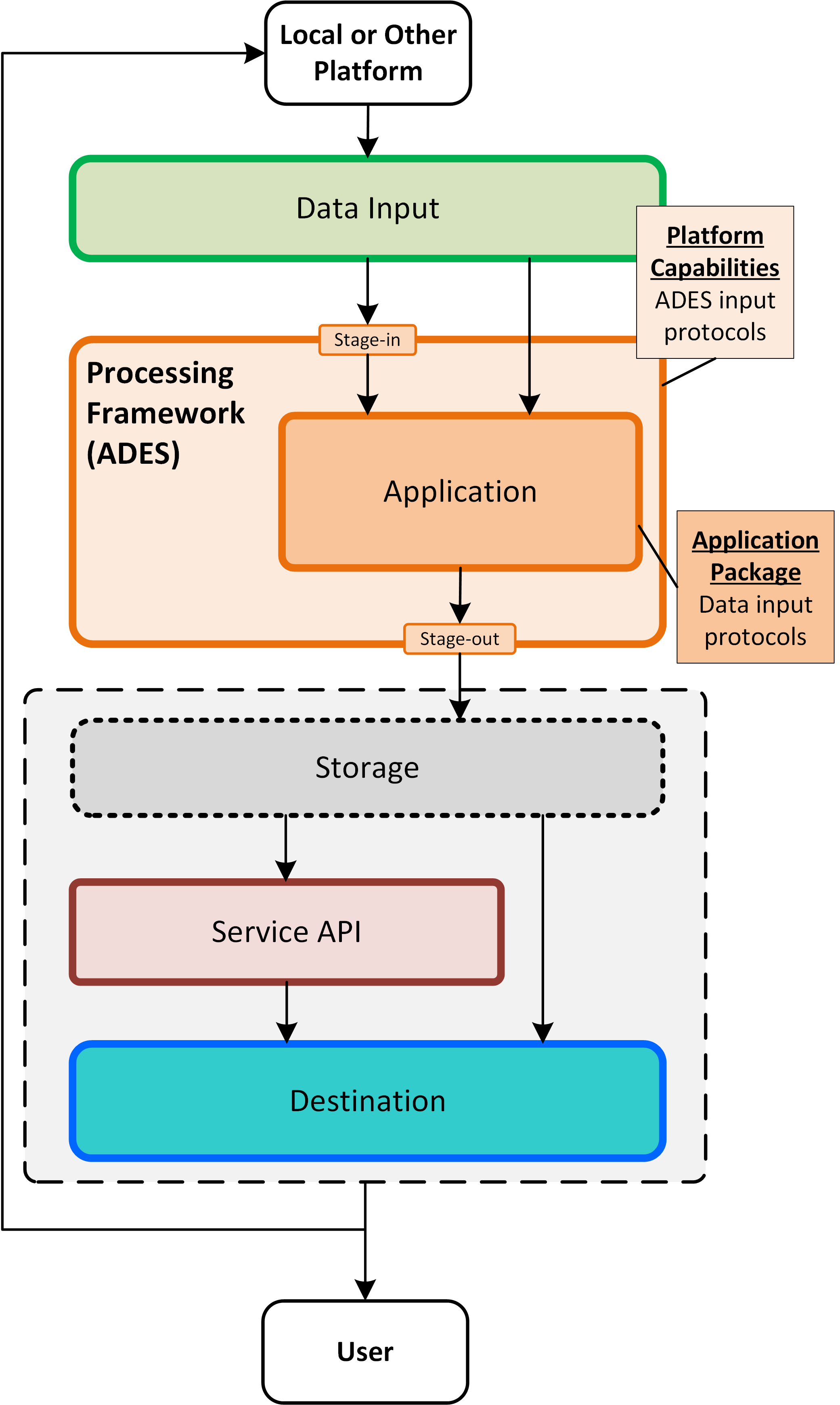

The Processing Framework provides the environment through which processing services and applications are executed in support of end-user analysis activities. It might be envisaged that some built-in (common) processing functions are provided, but the main focus of the processing framework is to support deployment and execution of bespoke end-user processing algorithms, and interactive analysis. Access to the underlying data from the executing processes is marshalled through the Data Access Gateway and its supporting Data Access Library.

The EP provides Catalogue services, so that end-users can discover and browse the resources available in the platform and its federated partners. Thus, end-users can discover available processing services and applications, and search for data available for inclusion in their analysis.

Data Services based upon open standards serve the clients of the Exploitation Platform for data access and data visualisation. Access to the underlying data is made via the Data Access Gateway.

The Service API represents the public service interfaces exposed by the Exploitation Platform for consumption by its clients. Covering all aspects of the EP (authentication, data/processing discovery, processing etc.), these interfaces are based upon open standards and are designed to offer a consistent EP service access semantic within the network of EO resources. Use of the network (HTTP) interfaces of the Service API is facilitated by the Client Library, which provides bindings for common languages (Python, R, JavaScript). The Client Library is a programmatic representation of the Service API; it acts as an abstraction of the Exploitation Platform and so facilitates the development of portable client implementations.

The Exploitation Tier hosts the web clients with which the end-user interacts to conduct their analysis/exploitation activities. These clients would typically utilise the Client Library in their implementation. The Web IDE is an interactive web application that Experts use to perform interactive research and to develop algorithms. The Command-line Client builds upon the Client Library to provide a command-line tool that can be used, for example, to automate EP interactions through scripts.

The Web Portal provides the main user interface for the Exploitation Platform. It is expected that each platform will provide its own bespoke portal implementation, so the portal itself is beyond the scope of the Common Architecture. Nevertheless, the architecture and its service interface must meet the needs anticipated by future exploitation platform implementations. Similarly, the External Application represents web applications (external to the hosting environment of the exploitation platform) that use the services of the EP via its Service API and Client Library.

All user interactions with the services of the EP are executed within the context of a given user and their rights to access resources, with associated resource usage billing. Thus, the Identity and Access Management component covers all tiers in this layered model.

The focus of this design document is the Platform Tier, which is elaborated in subsequent sections of the document:

- Section User Management

-

This section addresses the main concerns of User Management, which are user identity, access to resources and billing for resource usage.

- Section Processing and Chaining

-

This section covers application packaging and the Processing Framework through which services/applications can be deployed in federated workflows.

- Section Resource Management

-

This section covers resource discovery through catalogues that act as a marketplace for data, services and applications. Resource Management ensures data is accessible through standard interfaces that serve the processing framework, and public data services to visualise and consume platform data.

- Section Platform API

-

This section provides a consolidated description of the service interface of the EP and its associated client library, which together present a standard platform interface against which analysis and exploitation activities may be developed, and through which platform services can be federated.

4. User Management

4.1. Functional Overview

In the context of the Common Architecture, User Management covers the following main functional areas:

- Identity and Access Management (IAM)

-

Identification/authentication of users and authorization of access to protected resources (data/services) within the EP.

- Accounting and Billing

-

Maintaining an accounting record of all user accesses to data/compute/services/applications, supported by appropriate systems of credits and billing.

- User Profile

-

Maintenance of details associated to the user that may be needed in support of access management and billing.

These are explored in the following sub-sections.

|

Work In Progress

This section focuses on the WHAT functionality the design is meant to enable (tells the story)

|

4.1.1. Identity and Access Management (IAM)

The solution for IAM is driven by the need for Federated Identity and Authorization in the context of a network of collaborating exploitation platforms and connected services. This federated environment should facilitate an end-user experience in which they can use a single identity across collaborating platforms (Single Sign-On), they can bring their own existing identity to the platforms ('Login With' service), and platforms can access the federated services of other platforms on behalf of the end-user (delegated access and authorization).

The goal of IAM is to uniquely identify the user and limit access to protected resources to those having suitable access rights. We assume an Attribute Based Access Control (ABAC) approach in which authorization decisions are made based upon access policies/rules that define attributes required by resources and possessed (as claims) by users. ABAC is seen as a more flexible approach than Role Based Access Control (RBAC), affording the ability to express more sophisticated authorization rules beyond the role(s) of the user. Note that a role-based ruleset could be implemented within an attribute-based approach (i.e. RBAC is a subset/specialisation of ABAC).

User Management achieves this through:

-

Unique user identification

-

Access policy assignment to any given resource

-

Using the access policy, determine:

-

The set of attributes required to access the protected resource

-

Whether the user has the required attributes

-

For the Common Architecture, a separation of User Identification from Access Management is established. User identity is federated and handled external to the platform. Within the Network of EO Resources, resources held within an exploitation platform are made available to federated partner platforms. Authorization policy is enforced within the platform at point of access, but the access policy can be federated within the network of EO resources, leading to a system of federated authorization.

-

The identity is provided externally. The external IdP has no association to the exploitation platform, and hence is not the appropriate place to administer attributes that relate to EP resources

-

The protected resources are under the custodianship of the exploitation platform and hence the exploitation platform enforces the access policy decision

-

The administrative domain for an access policy should not be tied to an exploitation platform, which facilitates the provision of federation and virtual organisations
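The attribute-based check outlined in this section can be illustrated with a minimal sketch. The `AccessPolicy` structure, the attribute names and the `is_authorized` function are hypothetical and are not defined by the Common Architecture; the sketch only shows how a policy expressed as required attributes is matched against the claims held by a user, and how a role-based rule is a special case of the same mechanism.

```python
from dataclasses import dataclass, field


@dataclass
class AccessPolicy:
    """Hypothetical policy: attribute name -> values that satisfy the rule."""
    required: dict = field(default_factory=dict)


def is_authorized(policy: AccessPolicy, claims: dict) -> bool:
    """Permit only if the user holds an accepted value for every required attribute."""
    return all(
        bool(set(claims.get(name, ())) & set(accepted))
        for name, accepted in policy.required.items()
    )


# Attribute-based rule: project membership AND acceptance of a licence.
abac_policy = AccessPolicy({
    "project": {"flood-mapping"},
    "terms_accepted": {"sentinel-2-licence"},
})

# A role-based rule is the special case in which the only attribute is "role".
rbac_policy = AccessPolicy({"role": {"admin", "operator"}})

# Claims held by an illustrative user.
alice = {
    "project": {"flood-mapping"},
    "terms_accepted": {"sentinel-2-licence"},
    "role": {"user"},
}

print(is_authorized(abac_policy, alice))
print(is_authorized(rbac_policy, alice))
```

In this sketch the policy is held separately from both the user claims and the resource, which mirrors the separation of identity, enforcement and policy administration described above.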

Federation of services between exploitation platforms is an important goal of the Common Architecture. Thus, the IAM design offers an approach through which user access is managed between platforms, ensuring proper enforcement of access controls and billing.

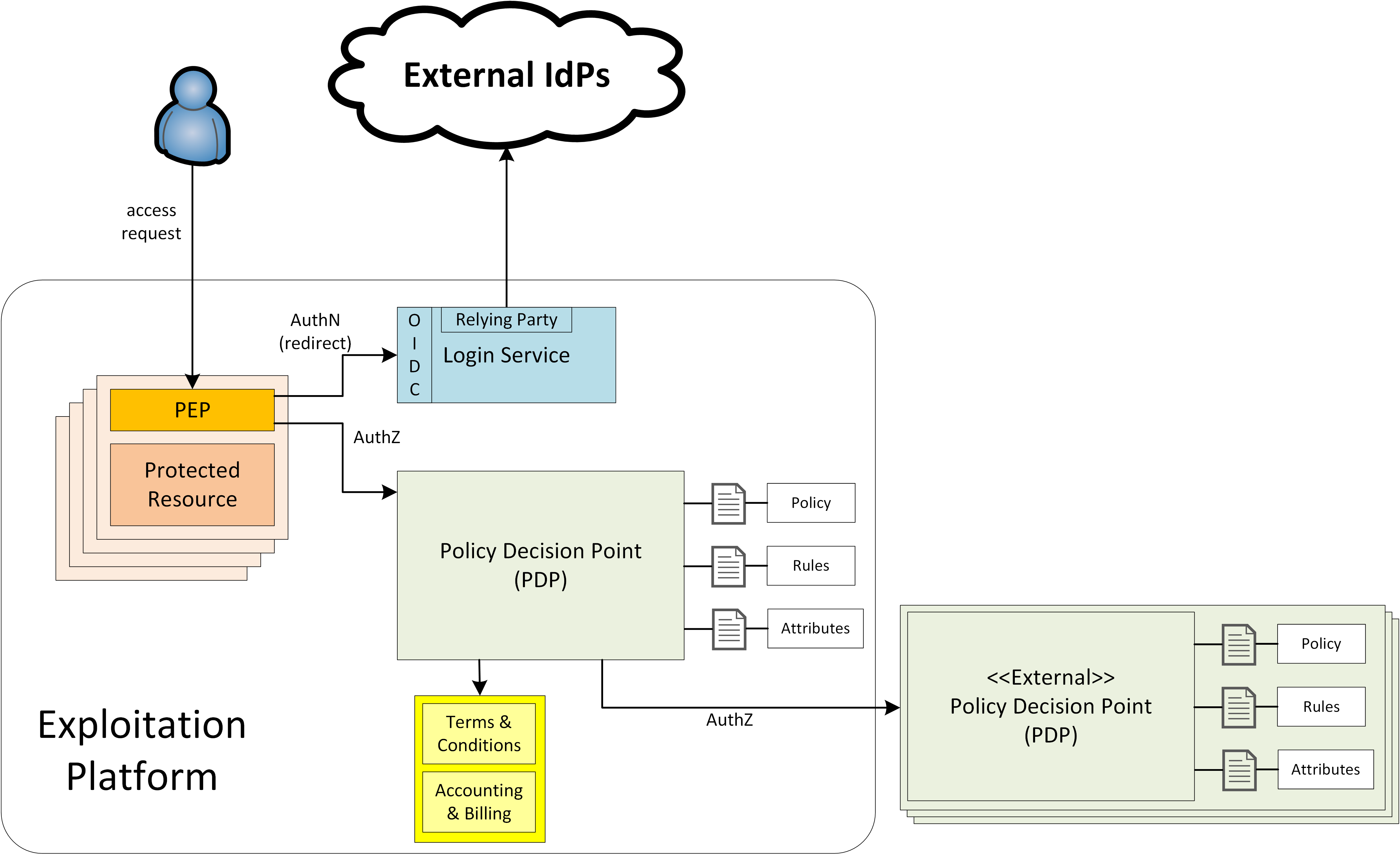

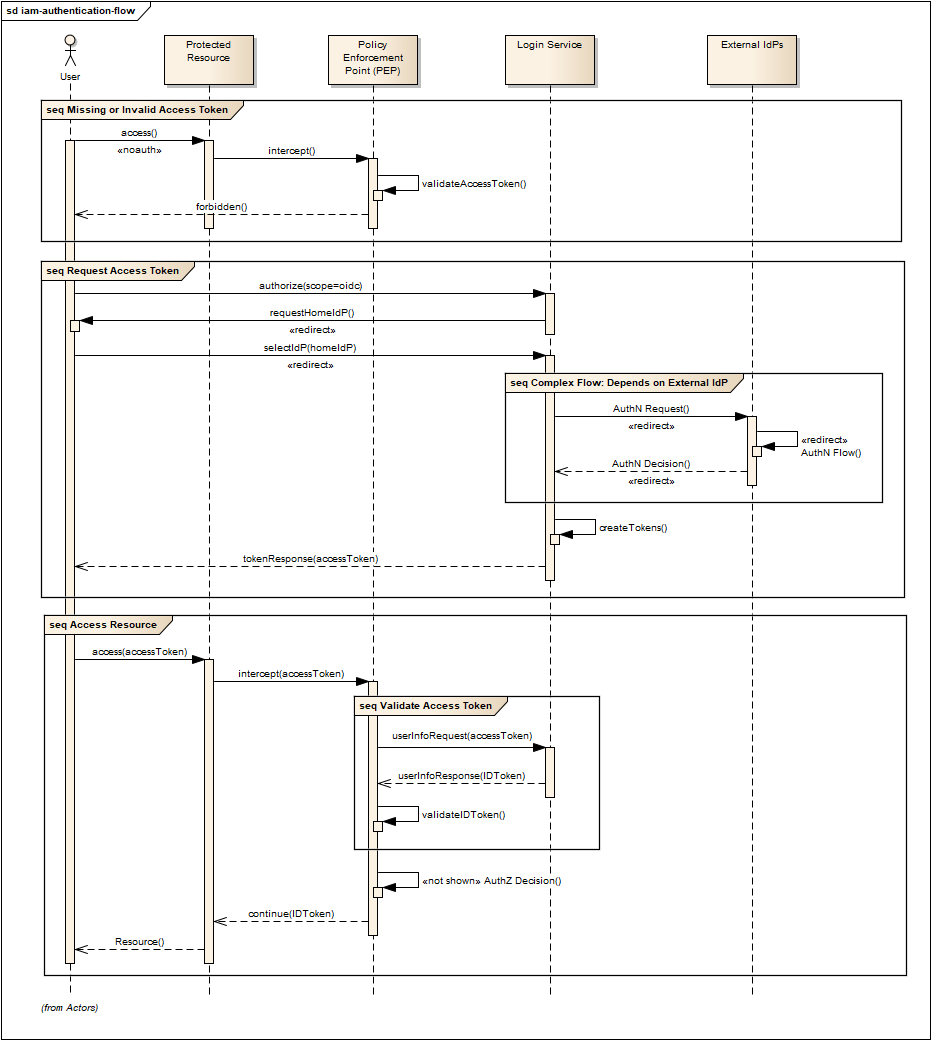

Figure 3 presents the basic approach. At this stage it does not consider the case in which an exploitation platform accesses resources in another platform on behalf of a user, (for example a workflow step that is invoked on another platform). This is addressed in a later section. Users are authenticated by redirection to an external identity provider, (their ‘home’ IdP). This returns the authentication decision and some basic user information as required (such as name, email, etc.).

Each protected resource is fronted by its Policy Enforcement Point (PEP), which acts as a filter that will only permit access if the appropriate conditions are met. This decision is made according to a set of rules that are under the control of and configured within the exploitation platform.

|

UMA Compliance

This section of text regarding PEP↔PDP interactions does not comply entirely with the approach stated by the UMA Flow. |

The Login Service is provided as a common component that is utilised by each client application to perform the authentication flow with the external IdPs, as a step prior to resource access. In the case of an unauthenticated request that requires authentication, the PEP will initiate the Login Service by redirection of the User’s originating request. The successful flow ultimately redirects back to the PEP and so maintains the direct connection between the end-user agent and the resource server. An alternative approach would be the use of an API Gateway to perform the role of the PEP, acting as an intermediary between the end-user agent and the resource server. However, this would have the effect of proxying the connection which can have an impact on data transfer performance, which is of particular importance in the case of significant data volumes being returned to the User.

The PEP interrogates the PDP for an authorization decision. The PEP sends a request that indicates the pertinent details of the attempted access, including:

-

Identity of end-user (subject)

-

The API (path/version etc.) being accessed (resource)

-

The operation (HTTP verb) being performed (action)

The Policy Decision Point (PDP) returns an authorization decision based upon details provided in the request, and the applicable authorization policy. The authorization policy may delegate all or part of the decision to external PDP(s) within the federated network. This represents a Federated Authorization model and facilitates a model of shared resources and virtual organisations.

The authorization policy defines a set of rules and how they should be evaluated to determine the policy decision. The rules are expressed through attributes. The policy is evaluated to determine what attributes are required, and what attributes the user possesses. This evaluation extends through external PDPs according to any federated authorization defined in the policy.

It should be additionally noted that the decision to allow the user access depends upon dynamic 'attributes', such as whether the user has enough credits to 'pay' for their usage, or whether they have accepted the necessary Terms & Conditions for a given dataset or service. Thus, the PDP must interrogate other EP-services such as 'Accounting & Billing' and 'User Profile' to answer such questions.
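As an illustration of the PEP to PDP interaction, the following sketch models the request (subject, resource, action) and a decision function that combines a static rule with the dynamic 'attributes' mentioned above. All names (`AccessRequest`, `make_pdp`, the rule functions and the in-memory lookups) are assumptions made for the example; in a platform the credit and Terms & Conditions checks would be answered by the 'Accounting & Billing' and 'User Profile' services, and the policy might delegate to external PDPs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AccessRequest:
    subject: str   # identity of the end-user
    resource: str  # API path being accessed
    action: str    # HTTP verb being performed


# A rule inspects the request and returns True when its condition is met.
Rule = Callable[[AccessRequest], bool]


def make_pdp(rules: dict) -> Callable[[AccessRequest], str]:
    """Build a decision function from per-resource rule lists (deny by default)."""
    def decide(request: AccessRequest) -> str:
        applicable = rules.get(request.resource)
        if not applicable:
            return "Deny"
        return "Permit" if all(rule(request) for rule in applicable) else "Deny"
    return decide


# In-memory stand-ins for the 'Accounting & Billing' and 'User Profile' services.
credits = {"alice": 12.0, "bob": 0.0}
accepted_terms = {"alice": {"/data/s2"}, "bob": {"/data/s2"}}


def has_credit(req: AccessRequest) -> bool:
    return credits.get(req.subject, 0.0) > 0.0


def accepted_tc(req: AccessRequest) -> bool:
    return req.resource in accepted_terms.get(req.subject, set())


def read_only(req: AccessRequest) -> bool:
    return req.action == "GET"


pdp = make_pdp({"/data/s2": [read_only, accepted_tc, has_credit]})
print(pdp(AccessRequest("alice", "/data/s2", "GET")))
print(pdp(AccessRequest("bob", "/data/s2", "GET")))
```

The deny-by-default behaviour for resources without a policy is a design choice of the sketch, not a requirement stated by this document.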

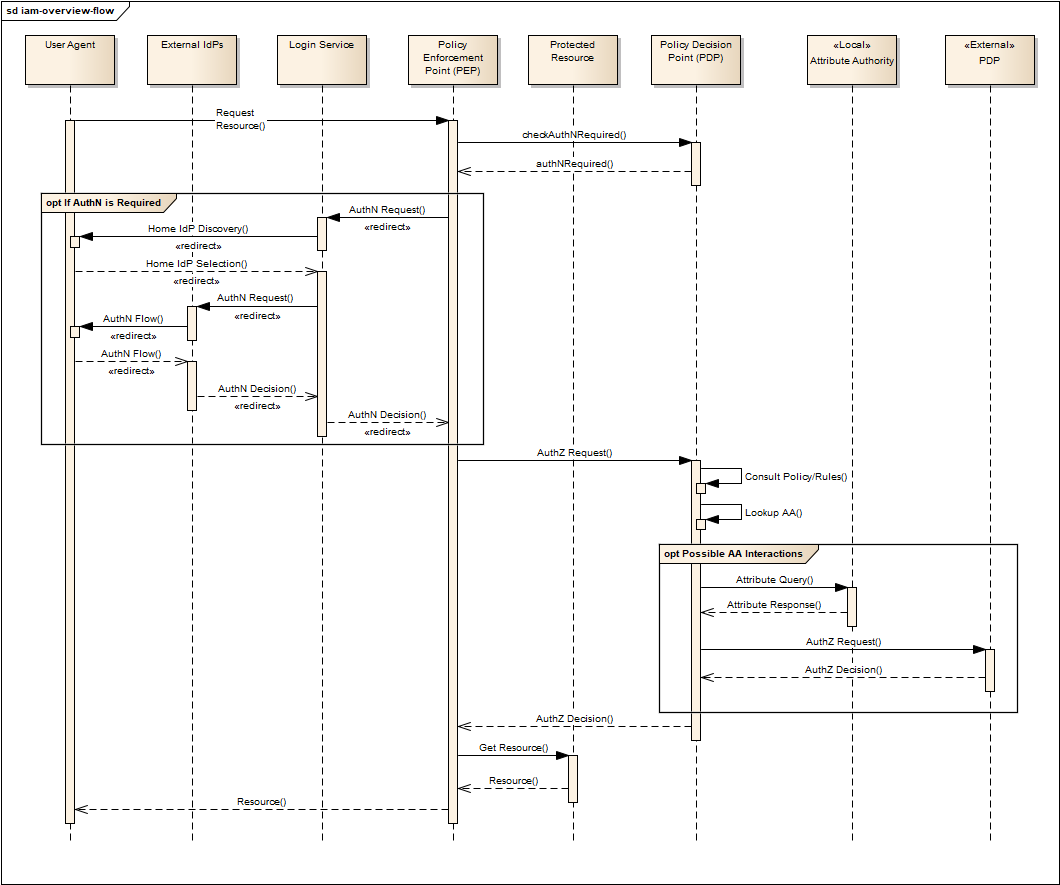

Figure 4 provides an overview of the IAM Flow, (success case).

Flows marked <<redirect>> should be interpreted as flows between services that are made by redirection through the User Agent. For brevity, the interface between the Login Service, the User Agent and the External IdPs is simplified in Figure 4 - they are expanded in section Authentication. It should also be noted that the flows with the External IdP will vary according to the protocol required by the External IdP, (e.g. OAuth, SAML, etc.).

4.1.2. Authentication

The approach to user identity and authentication centres around the use of OpenID Connect. Each Exploitation Platform maintains its own OIDC Provider through which tokens can be issued to permit access to protected resources within the EP. The authentication itself is delegated to external Identity Providers at the preference of the end-user wishing to reuse their existing identity provision.

Authenticated Identity

The Login Service is an OpenID Connect Provider that provides a ‘Login With’ service that allows the platform to support multiple external identity providers. The Login Service acts as a Relying Party in its interactions with the external IdPs to establish the authenticated identity of the user through delegated authentication.

The Login Service presents an OIDC Provider interface to its clients, through which the OIDC clients can obtain Access Tokens to resources. The access tokens are presented by the clients in their requests to resource servers (intercepted by PEP). The PEP (acting on behalf of the resource server) relies upon the access token to establish the authenticated identity of the users making the requests. Once the user identity is established, then the PEP can continue with its policy decision (deferred to the PDP).
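The way in which a PEP could establish the authenticated identity from a presented token can be sketched as follows, assuming the access token is a signed JWT carrying `sub` and `exp` claims. To keep the example self-contained it uses an HS256 shared secret and only the Python standard library; this is an assumption of the sketch. An OIDC deployment would more typically verify asymmetric signatures against keys published by the Login Service, using a vetted JWT library. The function names are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_decode(segment: str) -> bytes:
    # Restore the padding that compact JWT serialisation strips.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def sign_token(claims: dict, secret: bytes) -> str:
    """Issue a compact HS256 JWT (stands in for the Login Service)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(signature)}"


def authenticated_subject(token: str, secret: bytes,
                          now: Optional[float] = None) -> Optional[str]:
    """Return the 'sub' claim if the token is authentic and unexpired, else None."""
    try:
        header, payload, signature = token.split(".")
        expected = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
        if not hmac.compare_digest(expected, _b64url_decode(signature)):
            return None
        claims = json.loads(_b64url_decode(payload))
        if not isinstance(claims, dict):
            return None
        current = time.time() if now is None else now
        if claims.get("exp", 0) <= current:
            return None
        return claims.get("sub")
    except (ValueError, TypeError):
        return None


secret = b"demo-secret-not-for-production"
token = sign_token({"sub": "alice", "exp": 2000}, secret)
print(authenticated_subject(token, secret, now=1000))
```

Only once a subject is returned would the PEP proceed to request a policy decision from the PDP.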

Thus, clients of the EP act as OIDC Clients in order to authenticate their users to the platform, before invoking its services. Clients include the web applications that provide the UI of the exploitation platform, as well as other external applications/systems (including other exploitation platforms) wishing to use the services of the EP.

The Login Service acts as client (Relying Party) to each of the External IdPs to be supported and offered as a ‘Login With’ option. The interface/flow with the External IdP is integrated into the OIDC flow implemented by the Login Service. This includes prompting the user to discover their ‘home’ Identity provider. The interactions with the external IdP represent the ‘user authentication step’ within the OIDC flows. Completion of a successful authentication with the external IdP allows the Login Service to issue the requested access tokens (depending on the flow used).

Figure 5 illustrates the basic user access flow, invoked through a web browser.

Federated User Access

Based upon the above authentication model, an EP could access the resources of another EP by obtaining an access token through OIDC flows. However, considering that these EP→EP invocations will typically be Machine-to-machine (M2M), then we need to consider how the end-user (resource owner) is able to complete their consent. The User Management provides two possibilities:

-

The user pre-authorizes the EP→EP access in advance of the operation

-

Use of OIDC JWKS for trusted federation of identity between platforms

User Pre-authorization

Using the facilities of the Exploitation Platform, the user (perhaps via their User Profile management console) initiates the authorization flow from one EP to another. The end result is that the originating EP obtains delegated access to another EP on behalf of the user - with the resulting access tokens being maintained within the user’s profile on the EP.

At the point where the EP needs to access a resource on another EP, then the access tokens are obtained from the user’s profile and used as a Bearer token in the resource request to the other EP. Refresh tokens can be used to ensure that authorization is long-lived.

Conversely, the user’s profile at a given EP also provides the ability to manage any inward authorizations they have granted to other EPs, i.e. the ability to revoke a previous authorization by invalidating the refresh token.
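The pre-authorization model can be sketched as a small data structure held in the user's profile. The class and field names below are hypothetical: `outward` holds tokens that this EP has obtained for calling other EPs on the user's behalf, and `inward` records the refresh tokens this EP still honours for other EPs.

```python
from dataclasses import dataclass, field


@dataclass
class DelegatedGrant:
    access_token: str
    refresh_token: str


@dataclass
class DelegationProfile:
    user_id: str
    # Remote EP -> tokens this EP holds to act for the user at that EP.
    outward: dict = field(default_factory=dict)
    # Client EP -> refresh token this EP still honours for that client.
    inward: dict = field(default_factory=dict)

    def authorization_header(self, remote_ep: str) -> dict:
        """Build the Bearer header for a resource request to another EP."""
        grant = self.outward.get(remote_ep)
        if grant is None:
            raise LookupError(f"user has not pre-authorized access to {remote_ep}")
        return {"Authorization": f"Bearer {grant.access_token}"}

    def revoke_inward(self, client_ep: str) -> bool:
        """Invalidate the refresh token granted to another EP; True if one existed."""
        return self.inward.pop(client_ep, None) is not None


profile = DelegationProfile("alice")
profile.outward["ep-b.example"] = DelegatedGrant("access-123", "refresh-456")
profile.inward["ep-c.example"] = "refresh-789"

print(profile.authorization_header("ep-b.example"))
print(profile.revoke_inward("ep-c.example"))
```

Token refresh and secure storage of the tokens are deliberately left out of the sketch.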

Possible use of OIDC JWKS Federation

OIDC provides a framework in which RPs and OPs can dynamically establish verifiable trust chains, and so share keys to support signing and validation of JWTs.

Dedicated ‘federation’ endpoints are defined that allow an entity (such as RP or OP) to publish their own Entity Statements, and to obtain Statements for other entities that are issued by trusted third-parties within the federation. The metadata/signatures within the Entity Statements establish a chain of trust that can be followed to known (trusted) Trust Anchors, and so the Entity Statements and the included entity public keys can be trusted.

Thus, through this mechanism public keys can be shared to underpin the signing and validation of JWTs.

Within an EP, when a resource server is executing a user’s request, it may need to invoke a resource in another EP with which it is collaborating. The resource access to the other EP must be made on behalf of the originating user.

The nominal solution is for the originating EP to act as an OIDC Client to interface with the Login Service of the other EP, and so obtain the access token required to access the other resource. In this case, it is possible that the resource access may be asynchronous to the end-user request and is not made within the context of the end-user’s user agent.

OpenID Connect allows the use of the signed-JWT ID Token that can be carried through the calls into and across resource servers. Through the facilities provided by JSON Web Key Set (JWKS), ID Tokens can be verified and trusted by other platforms operating within the same JWKS key hierarchy.

Thus, using the trusted ID Token, it may be possible to follow an OIDC/OAuth flow from one EP to another, in which the user is deemed to have a-priori authorized the third-party access. At this point it is only the user’s identity that has been established, with the authorization decision subject to the rules of the PDP/PEP of the remote system. The identified user could have appropriate a-priori permissions (attributes) on the target resources to be granted access (ref. ‘Federated Attributes’) but, where these are not considered sensitive information, they can be provided within the ID Token statement, therefore facilitating Authorization.

Thus, it is the ID of the user that has been passed machine-to-machine to facilitate the service federation. This effectively achieves cross-EP single sign-on, without relying upon the user agent of the end-user providing cookies to the other EP.

4.1.3. Authorization

|

Work In Progress

The Authorization aspects of the User Management Task should be fleshed out. Main topics include:

|

The Authorization capabilities of an Exploitation Platform allow the End-Users and Resource Owners to interact with the various Resource Servers deployed within the realm of the Platform. The Exploitation Platform provides several capabilities to protect resources and authorize access attempts directed at them.

4.1.3.1. Access Policy Checks

At any point during the consumption of Platform resources, components or End-Users might need to perform Policy Checks to verify access rights to a specific resource. The platform provides an endpoint where requests can be made, referencing which End-User (if any) is accessing the resource, and the resource unique identifier.

The platform performs all the policy checks associated with the uniquely identified resource, and answers back with a "Permit" or "Deny" response.

4.1.3.2. Resource Protection Management

The Platform provides Resource Owners with the capability to define their own resource references, which can later be assigned specific access policies on demand. Resource definition, deletion and update operations allow the Platform to uniquely identify which resource is being accessed and allow both accounting operations and the ability to pull all relevant policies to be checked during an access attempt.

Resource Protection Management is achieved through the Resource API, as defined in section Policy API.

4.1.3.3. Access Policy Management

Resource Owners can utilize Platform management endpoints to declare specific access policies in the form of Policy Documents. These documents are stored in the Platform and contain references to uniquely identified resources. Policies enable the usage of a wide variety of access constraint types:

-

Based on Ownership: Only the Resource Owner can access the resource being protected.

-

Based on Access List: Only a pre-defined list of Platform users can access the resource.

-

Based on Attributes: Only users with an attribute set to a specific value or set of values can access the resource.

-

Based on Time Windows: Only requests within the specified time window can be executed.

-

Etc.
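The constraint types listed above can be sketched as fields of a single Policy Document, evaluated to a "Permit" or "Deny" response. The `PolicyDocument` layout and the `check_access` function are assumptions made for illustration and do not represent the Policy API of the platform.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class PolicyDocument:
    resource_id: str
    owner: str
    owner_only: bool = False                  # ownership constraint
    access_list: Optional[set] = None         # access-list constraint
    required_attributes: dict = field(default_factory=dict)  # name -> accepted values
    not_before: Optional[datetime] = None     # time-window constraint
    not_after: Optional[datetime] = None


def check_access(policy: PolicyDocument, user_id: str,
                 attributes: dict, when: datetime) -> str:
    """Evaluate every constraint present in the policy; any failure denies access."""
    if policy.owner_only and user_id != policy.owner:
        return "Deny"
    if policy.access_list is not None and user_id not in policy.access_list:
        return "Deny"
    for name, accepted in policy.required_attributes.items():
        if attributes.get(name) not in accepted:
            return "Deny"
    if policy.not_before is not None and when < policy.not_before:
        return "Deny"
    if policy.not_after is not None and when > policy.not_after:
        return "Deny"
    return "Permit"


policy = PolicyDocument(
    resource_id="dataset-42",
    owner="carol",
    access_list={"alice", "carol"},
    required_attributes={"affiliation": {"academic"}},
    not_after=datetime(2030, 1, 1, tzinfo=timezone.utc),
)
when = datetime(2025, 6, 1, tzinfo=timezone.utc)
print(check_access(policy, "alice", {"affiliation": "academic"}, when))
```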

4.1.3.4. End-User Context Propagation

After successful authorization of an End-User access request to a resource, Resource Servers can choose to extract a minimal set of End-User information that provides context to the request. This serves the purpose of enabling propagation of secondary requests necessary to correctly execute the original one, allowing authorization enforcement of these secondary requests, and tracing them back to the End-User as original "driver" of these.

|

Example

An End-User might execute a processing request that in turn requires the processing environment to pull a remote dataset. This secondary action of retrieving data happens behind the scenes but also requires user authorization. The processing environment can choose to pull the End-User context from the original request and propagate it to this required dataset request for authorization purposes. |

4.1.3.5. Policy Context Propagation

After successful authorization of an End-User access request to a resource, Resource Servers can choose to extract a minimal set of Policy information that provides context for the request made within the Platform. This allows the Resource Server to perform fine-grained access control on its own, down to the actual contents of the response.

|

Example

An End-User might execute a GetCapabilities request which the Resource Server wants to adapt to the visibility rights associated to each Process. The Resource Server can choose to pull Policy Context from the original request and use it to filter out specific processes from the resulting list. |
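The GetCapabilities example can be sketched as a simple filter. The representation of the Policy Context as a set of visibility tags, and the names `visible_processes` and `catalogue`, are assumptions of this sketch; the document does not define the content of the Policy Context.

```python
def visible_processes(processes: dict, policy_context: set) -> list:
    """Return the process identifiers whose visibility tags overlap the policy context.

    processes: process identifier -> set of visibility tags required to see it.
    policy_context: visibility tags established when the request was authorized.
    """
    return sorted(
        process_id
        for process_id, tags in processes.items()
        if tags & policy_context
    )


catalogue = {
    "ndvi": {"public"},
    "flood-extent": {"project:flood-mapping"},
    "internal-qa": {"staff"},
}

# Context extracted from the authorized request (hypothetical values).
context = {"public", "project:flood-mapping"}
print(visible_processes(catalogue, context))
```

The Resource Server would then build its capabilities response from the filtered list only.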

4.1.4. Accounting and Billing

The platform accounts for resource use both within the platform and in other platforms via federation. In addition, several inter-platform billing models are supported as defined in the use cases, [EOEPCA-UC]. A number of principles must first be established:

-

Actions are performed within the context of a 'billing identity', which may be different to the user’s identity.

-

Charges are the result of discrete 'billing events' occurring within a particular 'billing window'. Pricing must consider all events within the window, not events individually (to support, for example, tiered pricing).

-

Different platforms may follow completely different pricing and billing models. The architecture and federation messaging cannot assume any particular method of calculation or for describing prices.

-

Only the platform hosting it can accurately price the use of a licensed Resource or compute resource.

-

Costs may be estimated but the estimate is not required to be binding. Federated access can never rely on binding estimates.

-

Debts can only be created where there is a direct contractual relationship and opportunity for credit control. A user can never owe money directly to another platform unless they have an account with it.

-

A platform prices in a single currency (but could choose to allow a user to settle a bill with another currency). Different federated platforms may choose different currencies.
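The principle that pricing considers all events within a billing window can be shown with a worked example of tiered pricing. The tariff in `TIERS`, the `BillingEvent` structure and the `price_window` function are hypothetical; each platform is free to follow its own pricing model.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class BillingEvent:
    billing_identity: str
    quantity: Decimal  # for example, CPU-hours consumed


# Hypothetical tariff: (upper bound of tier, unit price); None means no upper bound.
TIERS = [
    (Decimal("100"), Decimal("0.10")),
    (Decimal("1000"), Decimal("0.08")),
    (None, Decimal("0.05")),
]


def price_window(events: list, billing_identity: str) -> Decimal:
    """Price the total quantity in the window, tier by tier."""
    total = sum(
        (e.quantity for e in events if e.billing_identity == billing_identity),
        Decimal("0"),
    )
    charge = Decimal("0")
    lower = Decimal("0")
    for upper, unit_price in TIERS:
        if total <= lower:
            break
        band_top = total if upper is None else min(total, upper)
        charge += (band_top - lower) * unit_price
        lower = band_top
    return charge


window = [
    BillingEvent("org-a", Decimal("60")),
    BillingEvent("org-a", Decimal("90")),
    BillingEvent("org-b", Decimal("10")),
]
print(price_window(window, "org-a"))
```

For `org-a` the window total is 150 units, so the first 100 are priced at 0.10 and the remaining 50 at 0.08. Pricing the two events individually would charge all 150 units at the first-tier rate, which is why events cannot be priced in isolation.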

Billing Identities

A billing identity is a user identity for a user who has established a billing relationship with the platform. A billing user may delegate chargeable service access to other users within the system, permitting that user to use resources billed to the billing identity.

Individual platforms may choose models with varying complexity. For example, one platform may require that the billing and user identity are always the same, whilst another may permit a user working on multiple cross-organizational projects to choose the billing identity to use. Identities may be related to organizations, projects, etc, for access control and credit control purposes - but these relationships are not required by the architecture.

As required by their purpose, cross-platform messaging will include both the user id and the relevant billing identity.

|

Combined usage of user and billing identities

Both the billing and user identities, and other information such as the location of each one and the type of organization involved, may be relevant to determining prices. This is because the place of supply for VAT purposes must be determined, plus any discounts for, for example, academic use. Note that 'location' means more than 'country' (e.g. the Canary Islands have lower VAT than Madrid). Also, some organizations may be treated differently, such as international organizations exempt from all tax. |

Commercially Licensed Resources

Users may publish Resources which are licensed to others on commercial terms and use the platform to collect payments. There are two types of charges which require support within User Management: time-based and volume-based.

Time-based charges occur when a user requests a licence which costs a fixed price for a fixed time (or is permanent), regardless of the accesses made to the Resource. The Data Access Services and Execution Management Services determine when such a licence is required and the licence manager manages the process for buying one, including emitting a billing event. This typically will happen in advance of a request. The licence manager may give the billing service an opportunity to reject the request, if applicable to the platform’s billing model.

Volume-based charges occur as access to a licensed Resource proceeds or completes (for example, on first access to a specific satellite image or for each input image passed to a commercial machine learning model). Again, the licence manager reports these as billing events when a licence requirements check is made.

Pricing is specified by the Licenser (in a particular form supported by the platform) and stored by the pricing engine (quantity to price mapping) and licence manager (method for determining which licences and 'quantity'). The licence manager must emit three billing events when licence grants are bought: a charge to the user, a credit to the Licenser and a charge to the Licenser representing the platform fee for handling payment processing.
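The three billing events emitted for a licence purchase can be sketched as follows. The event structure, the sign convention (positive for a charge, negative for a credit) and the percentage-based platform fee are assumptions of the example.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class LicenceBillingEvent:
    account: str
    amount: Decimal  # positive = charge, negative = credit
    reason: str


def licence_purchase_events(user: str, licenser: str, price: Decimal,
                            platform_fee_rate: Decimal) -> list:
    """Return the three events for one licence grant purchase."""
    fee = (price * platform_fee_rate).quantize(Decimal("0.01"))
    return [
        LicenceBillingEvent(user, price, "licence purchase"),
        LicenceBillingEvent(licenser, -price, "licence sale"),
        LicenceBillingEvent(licenser, fee, "platform fee"),
    ]


events = licence_purchase_events("alice", "acme-eo", Decimal("200.00"), Decimal("0.05"))
for event in events:
    print(event)
```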

Budgets

|

Work In Progress

The Budget aspects of the User Management Task are not addressed in this document for the time being. |

Inter-platform Payments

Three different models for federated availability of commercial services are supported, two of which require support from the accounting and billing mechanisms of the platforms involved. This support comes in the form of inter-platform payments, allowing users to pay for executions or Resource licences which are located elsewhere in the federation.

Note that three platforms may be involved in providing a chargeable federated commercial service:

-

The home platform where the user is registered and the action is initiated.

-

The host platform where the licensed Resource or chargeable compute resource is located.

-

The compute platform where processing occurs.

Consider, for example, a processing chain invoked on the home platform which invokes a processing service running on the compute platform using a software container published by a Licenser registered on the host platform. Frequently, two or more of these platforms are the same. However, even if all three are the same the platform may wish to use the same process where payments to a Licenser are involved.

Inter-platform Payment Model and Process

An inter-platform payment supports a User of one platform paying for a service provided by either another platform or by a User of another platform. It’s important to repeat that a debt is only ever created between two entities which have a legal relationship and an opportunity for credit control. This requires that inter-platform payments involve two or three separate debts: one from the User to the home platform, one from the home platform to the host platform and, where applicable, a third from the host platform to the User providing the service. The process must also cope with the price not being known in advance in all cases, since processing costs in particular may be unpredictable. To support this, the following stages are involved:

-

Authorization stage: This provides an opportunity for credit control decisions in advance of debts being incurred. This establishes a maximum amount of debt before a new authorization must be sought or the operation aborted but will not necessarily ever be owed in full. Both home and host platform must agree to authorize an inter-platform payment (the host platform may reject if it doesn’t believe the home platform will pay). The home platform may 'hold' some account credit from its user or authorize a credit card payment if appropriate in its billing model.

-

Clearing stage: This occurs after a debt is legally incurred, such as after (some of) the computation or data access is completed. The platform on which the service is provided, the host platform, reports to the home platform how much debt has actually been incurred. It may happen in stages - for example a large authorization may occur, followed by the clearing of smaller amounts after every hour of compute time. It cannot exceed the amount authorized.

-

Settlement stage: This involves a batch of multiple payments, such as a day or a month of payments. The platforms with payment processing contracts in place must reconcile their records and calculate a net amount owed (potentially in multiple currencies). They must then settle the net debt by making a payment using the banking system.
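The three stages can be sketched with a small model, assuming hypothetical names throughout. An authorization fixes the maximum debt, clearing records the debt actually incurred (possibly in several parts, never exceeding the authorization), and settlement nets the cleared amounts between two platforms. Currency conversion is ignored in this sketch.

```python
from decimal import Decimal


class InterPlatformPayment:
    """One authorized payment from a home platform to a host platform."""

    def __init__(self, transaction_id: str, authorized: Decimal):
        self.transaction_id = transaction_id
        self.authorized = authorized      # authorization stage: maximum debt
        self.cleared = Decimal("0")       # clearing stage: debt actually incurred

    def clear(self, amount: Decimal) -> bool:
        """Clear part of the authorization; refuse anything beyond the authorized amount."""
        if amount <= 0 or self.cleared + amount > self.authorized:
            return False
        self.cleared += amount
        return True


def net_settlement(a_owes_b: list, b_owes_a: list) -> Decimal:
    """Settlement stage: net amount platform A pays platform B (negative if B pays A)."""
    owed_to_b = sum((p.cleared for p in a_owes_b), Decimal("0"))
    owed_to_a = sum((p.cleared for p in b_owes_a), Decimal("0"))
    return owed_to_b - owed_to_a


payment = InterPlatformPayment("tx-1", Decimal("50"))
print(payment.clear(Decimal("20")))   # first hour of compute
print(payment.clear(Decimal("20")))   # second hour
print(payment.clear(Decimal("20")))   # would exceed the authorization

reverse = InterPlatformPayment("tx-2", Decimal("30"))
reverse.clear(Decimal("15"))
print(net_settlement([payment], [reverse]))
```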

Two different commercial models are supported: bilateral clearing and central clearing. In bilateral clearing every platform must negotiate a contract with every other platform (or as far as possible - incomplete coverage will limit what users can do). This has certain commercial downsides, such as a need for every-pair auditing for accurate reporting of resource use and a danger of incumbents excluding new entrants. In central clearing a clearing house must exist and all platforms form a relationship with the clearing house. The clearing house technical functionality is not further explored here, nor is the management of counterparty risk. The messaging and process are intended to be the same in both models.

Where inter-platform payments are used the host platform is acting as a subcontractor to the home platform. Should the host platform fail to perform, a dispute resolution process must be used. This is considered out of scope of the architecture, except that payments may be marked as disputed, refunded or charged back. This must be accounted for during reconciliation between platforms.

Federated Commercial Services Without Inter-platform Payments: Direct Payments

If inter-platform payments are not available, for example because two platforms do not have a payment agreement, it may still be possible to provide services across multiple platforms providing the user has an account and billing relationship with each one directly. This requires that both platforms recognize both the user and the selected billing identity, and that the billing user has delegated access to the user in both platforms.

To handle direct payments the user must authorize the home platform to act on their behalf when submitting requests to the host platform. This is done using OAuth. The home platform must redirect the user to the host platform which then returns an authorization token to the home platform. Federated platforms must run an OAuth endpoint for this purpose and certain restrictions must be put on its functioning (for example on refresh token lifetime).
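The redirect from the home platform to the host platform can be sketched as the construction of an OAuth 2.0 authorization request. The sketch assumes the authorization code grant and uses the standard request parameters of that grant; the endpoint URL, client identifier, redirect URI and scope value are hypothetical.

```python
import secrets
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlsplit


def build_authorization_url(authorize_endpoint: str, client_id: str,
                            redirect_uri: str, scope: str,
                            state: Optional[str] = None) -> tuple:
    """Return the URL to redirect the user to, plus the state value to verify on return."""
    state = state or secrets.token_urlsafe(16)
    query = urlencode({
        "response_type": "code",      # authorization code grant (assumed)
        "client_id": client_id,       # the home platform, registered at the host
        "redirect_uri": redirect_uri, # where the host platform sends the user back
        "scope": scope,
        "state": state,               # binds the response to this request
    })
    return f"{authorize_endpoint}?{query}", state


url, state = build_authorization_url(
    "https://host-ep.example/oauth/authorize",
    client_id="home-ep",
    redirect_uri="https://home-ep.example/oauth/callback",
    scope="direct-payment",
    state="demo-state",
)
print(url)
print(parse_qs(urlsplit(url).query)["redirect_uri"])
```

On return, the home platform would compare the received `state` with the stored value before exchanging the code for tokens.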

Other system components must then use an access token when making requests to the host platform. The host platform should still report costs and identifiers to the home platform, which must be passed to the Billing Service to be recorded. This aids dispute resolution and the reporting of total costs for particular requests.

Estimating Inter-platform Costs

|

Work In Progress

Estimation of Inter-platform costs is not addressed in this document for the time being. |

Relationship to System Components

The Billing Service handles inter-platform payments and supports direct payments in response to requests from other components, such as the EMS. The direct payment model is very different to inter-platform payments but knowledge of the distinction and when each should be used should be isolated in the Billing Service as much as possible.

To support this for volume-based charges, interaction between other system components and the Billing Service proceeds as follows:

-

Prior to federated resource use, a component must make a request to the Billing Service with the estimated cost (or a fixed value if not available) and the identity of the host platform. It must also include the transaction ID for the user action which resulted in the payment.

-

The Billing Service determines what kind of payment handling is available, if any. It returns success or failure and, optionally, an OAuth URL to authorize direct payment.

-

The component proceeds with its activity, incurring charges. The activity occurs on the compute platform, which may also be the home or host platform.

-

The compute platform seeks authorization from the host platform before charges are incurred. The host platform checks that an authorized payment exists (directly between the home and host platform). If the charge is for compute resources then these are the same platform and may be a no-op, but this may not be the case for computation using licensed data or software.

-

If the compute platform seeks access from a host platform which has no authorized payment in place then it must report this to the home platform. The home platform may then request authorization or abort the processing. This may happen if the home platform cannot fully predict the accesses made during computation.

-

The compute platform computes, incurring charges. The compute platform may also access the host platform to retrieve data or software but this may also be cached. The resource use is reported by the compute platform to the host platform - for example, a list of images accessed or processed. This happens in multiple chunks when charges are incurred over time.

-

The host platform clears pieces of the original inter-platform authorization by sending a clearing request directly to the home platform. Note that only the host platform is considered authoritative for calculating the true cost (which is returned here).

-

If the original authorization is exhausted then the home platform may pre-emptively extend it by creating a new payment (with the same transaction ID). Otherwise the host platform must reply to a charge report from the compute platform with a response prohibiting further charges.

-

On receiving such a message the compute platform must suspend further processing and forward the response to the home platform. The home platform must then either seek a new authorization or send an abort message to the compute platform.
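The clearing, exhaustion and extension steps above can be illustrated with a minimal sketch. The `Authorization` class, the `handle_charge_report` and `extend` functions and the response fields are hypothetical names chosen for illustration; the design does not prescribe a message format.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class Authorization:
    """An inter-platform payment authorization as seen by the host platform (hypothetical model)."""
    transaction_id: str
    authorized: Decimal
    cleared: Decimal = Decimal("0")

    @property
    def remaining(self) -> Decimal:
        return self.authorized - self.cleared


def handle_charge_report(auth: Authorization, true_cost: Decimal) -> dict:
    """Host-side handling of one charge report from the compute platform.

    The host platform is authoritative for the true cost. It clears that
    amount against the authorization, or prohibits further charges when
    the remaining authorization cannot cover it.
    """
    if true_cost > auth.remaining:
        return {"status": "prohibited", "transaction_id": auth.transaction_id,
                "remaining": auth.remaining}
    auth.cleared += true_cost
    return {"status": "cleared", "transaction_id": auth.transaction_id,
            "cost": true_cost, "remaining": auth.remaining}


def extend(auth: Authorization, amount: Decimal) -> None:
    """Home platform extends the authorization with a new payment under the same transaction ID."""
    auth.authorized += amount
```

In this sketch a `prohibited` response is what the compute platform would forward to the home platform, which then either calls `extend` (a new authorization) or aborts the processing.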

For time-based licences the flow can be simpler:

-

The component requests payment authorization from the Billing Service, specifying an exact price.

-

The component communicates with the host platform to acquire the licence.

-

The host platform sends a payment clearing message to the home platform Billing Service to clear the entire authorization.

Payment Processing Systems

Payment processing itself, in particular card payment processing, may be initiated by the Billing Service but should be strictly separate from it. [PCI-DSS] imposes many onerous requirements not just on the software and hardware used for payment processing, but also on the wider organization and its processes (for example, for formal change reviews and code reviews, the use of specialist cryptographic hardware security modules, the separation of duties between staff and requirements in recruitment and training). For these reasons some implementers will need to avoid card processing within the system entirely and redirect users to externally hosted payment servers. This may constrain them to an account credit-based model whilst other providers may be able to initiate an authorization or full payment on-demand.

4.1.5. User Management

The User Profile is a system resource that maintains a set of data for each user including:

-

User details

-

Terms and conditions accepted by the user

-

Licence keys held by the user

-

User API key management

The User Profile for a given user is tied to the unique identifier provided by their Home-IdP through the authentication process. A front-end solution is put in place to facilitate User Profile editing and the ability to exercise GDPR-related rights (e.g. the right to be forgotten).
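As a minimal sketch of the data held per user, assuming an in-memory store, the profile could be modelled as follows. The class names, field names and the `forget` operation are hypothetical and only illustrate the items listed above.

```python
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    """Hypothetical User Profile record keyed by the Home-IdP identifier."""
    idp_subject: str                                  # unique identifier from the Home-IdP
    details: dict = field(default_factory=dict)       # user details, e.g. name and email
    accepted_terms: set = field(default_factory=set)  # IDs of accepted terms and conditions
    licence_keys: dict = field(default_factory=dict)  # licence ID -> licence key
    api_keys: set = field(default_factory=set)        # API keys issued to the user


class ProfileStore:
    """In-memory stand-in for the User Profile resource."""

    def __init__(self) -> None:
        self._profiles: dict = {}

    def get_or_create(self, idp_subject: str) -> UserProfile:
        """Return the profile tied to this identifier, creating it on first login."""
        return self._profiles.setdefault(idp_subject, UserProfile(idp_subject))

    def forget(self, idp_subject: str) -> bool:
        """Erase the profile (right to be forgotten); True if one existed."""
        return self._profiles.pop(idp_subject, None) is not None
```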

4.1.6. Licence and T&C Management

A licence manager must determine whether or not licence requirements permit certain actions by a certain user. For freely available resources simple acceptance of the licence may be all that is necessary. For commercially licensed resources it may be much more complicated. For example, a licence may have been bought for non-educational use by up to 5 users for satellite images with a certain resolution and area, with an extra charge made for images less than 15 degrees off nadir. Alternatively, a commercially licensed processing service may be charged by the CPU-hour or user-month. This is handled by the pricing and billing services, but acceptance of these terms must still be made first.

Some concepts applicable within the licence manager must be established:

-

A licence consists of the legal text itself, a name and version, a description of pricing where appropriate and other metadata.

-

A licence terms acceptance is the acceptance by a particular user (and organization) of the licence terms and conditions.

-

A licence grant gives a user access to particular parts of a resource or for particular purposes. This is only applicable to commercially licensed resources. A licence grant is signed by the licenser.

-

The licence manager does not know which resources require which licences. It only knows data about identified licences and about which users have which acceptances and grants.
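The concepts above could be captured in a data model along the following lines. This is a sketch only: the class and field names are assumptions, and the signature is kept as an opaque string because the signing scheme is not defined here.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Licence:
    licence_id: str                            # identifies the licence
    name: str
    version: str
    legal_text: str
    pricing_description: Optional[str] = None  # only where appropriate


@dataclass(frozen=True)
class TermsAcceptance:
    licence_id: str
    user_id: str
    organization: Optional[str] = None


@dataclass(frozen=True)
class LicenceGrant:
    licence_id: str
    user_id: str
    scope: str      # the part of the resource, or the purpose, that is covered
    signature: str  # produced by the licenser (opaque in this sketch)


@dataclass
class LicenceManagerState:
    """Holds licences, acceptances and grants only; no resource-to-licence mapping."""
    licences: dict = field(default_factory=dict)
    acceptances: set = field(default_factory=set)
    grants: list = field(default_factory=list)

    def accept(self, licence_id: str, user_id: str,
               organization: Optional[str] = None) -> None:
        if licence_id not in self.licences:
            raise KeyError(f"unknown licence: {licence_id}")
        self.acceptances.add(TermsAcceptance(licence_id, user_id, organization))

    def has_accepted(self, licence_id: str, user_id: str) -> bool:
        return any(a.licence_id == licence_id and a.user_id == user_id
                   for a in self.acceptances)
```

Note that nothing in `LicenceManagerState` refers to resources, which reflects the statement that the licence manager does not know which resources require which licences.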

The licence manager does not formally know how to calculate the price of a commercial licence grant. Instead, it produces an identifier for a particular type of grant and a quantity. The billing engine turns this into a price, which may involve applying any user-specific or volume-based discounts. The quantity may be in, for example, square kilometres. Alternatively, the licences may be priced at €1/unit, effectively transferring responsibility to the licence manager’s configuration.
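The split of responsibilities can be sketched as follows, assuming a hypothetical unit-price table and a single fractional discount. The grant type identifier `sat-imagery-km2`, the prices and the `price` function are illustrative only.

```python
from decimal import Decimal

# Hypothetical billing engine configuration: price per unit for each grant type.
UNIT_PRICES = {
    "sat-imagery-km2": Decimal("0.20"),  # EUR per square kilometre
    "per-unit": Decimal("1"),            # EUR 1/unit: the quantity carries the price
}


def price(grant_type: str, quantity: Decimal,
          discount: Decimal = Decimal("0")) -> Decimal:
    """Turn the (grant type, quantity) pair from the licence manager into a price.

    `discount` is a fraction between 0 and 1 standing in for any
    user-specific or volume-based discount.
    """
    unit = UNIT_PRICES[grant_type]
    return (unit * quantity * (Decimal("1") - discount)).quantize(Decimal("0.01"))
```

With the `per-unit` type the licence manager effectively sets the price itself by choosing the quantity, which corresponds to the €1/unit alternative.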

Note that licensers must ensure that their licences are uniquely identified across the whole federation. That is, if they use the same licence on multiple platforms they must give it the same ID and must not otherwise reuse IDs.

Licence Requirement Checks

At the request planning stage the EMS determines the licences required (as far as is possible in advance). This results in a list of licence requirement specifications. These may vary in complexity, from simply identifying a dataset to specifying an AoI, ToI and additional attributes, depending on platform support and on any knowledge the EMS has about which request fields are licence-relevant. The licence manager, however, only performs matching of these against rules or configuration using no or limited knowledge of the specific meaning of fields.

On receiving licence requirement specifications, the licence manager must compare them against the licences and grants possessed by the user and determine what licences, if any, must be obtained by the user before the action is permitted. On failure, the result should contain something the user can act on, such as a URL for viewing and agreeing to dataset terms or for buying licences. On success, the licence manager may return information on which fields were used so that the EMS can avoid repeated checks.
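A minimal sketch of such a check follows. It assumes each requirement specification carries a `licence_id`, and that the licence manager holds a catalogue entry per licence; the `CheckResult` structure, the catalogue keys and the action names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class CheckResult:
    permitted: bool
    actions: list = field(default_factory=list)      # what the user can do on failure
    fields_used: list = field(default_factory=list)  # lets the EMS avoid repeated checks


def check_requirements(specs, accepted, granted, catalogue):
    """Compare requirement specifications against a user's acceptances and grants.

    specs:     list of dicts, each with at least a "licence_id" key
    accepted:  set of licence IDs whose terms the user has accepted
    granted:   set of licence IDs for which the user holds a grant
    catalogue: licence ID -> {"commercial": bool, "terms_url": str, "purchase_url": str}
    """
    actions = []
    for spec in specs:
        licence_id = spec["licence_id"]
        info = catalogue[licence_id]
        if licence_id not in accepted:
            # Terms must be accepted before anything else.
            actions.append({"licence_id": licence_id, "action": "accept_terms",
                            "url": info["terms_url"]})
        elif info["commercial"] and licence_id not in granted:
            # Commercial licences additionally need a grant.
            actions.append({"licence_id": licence_id, "action": "buy_grant",
                            "url": info["purchase_url"]})
    if actions:
        return CheckResult(permitted=False, actions=actions)
    return CheckResult(permitted=True, fields_used=["licence_id"])
```

A real matcher would also evaluate fields such as AoI and ToI against rules or configuration; this sketch matches on the licence identifier only.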

The licence manager is also able to determine when additional commercial licence grants should be added (and charged for) automatically. The user must have previously agreed to the licence terms and pricing. When a new licence grant is added the licence manager should record it and issue a billing event.

Licence grants may also be managed by an external service operated by the licenser. This communication is managed entirely by the licence manager.

Processing may cross platform boundaries within the federation. A platform executing processing or supplying a resource must be able to determine that the processing is running in a context in which any required licences are available. To support this, the context must include enough information to identify the licence manager endpoint of the originating platform. When a licence manager receives a licence requirement specification which cannot be satisfied locally it should use this endpoint to perform a licence requirement check. The originating platform may fail this request, may accept it based on existing data (returning signed licence grants if appropriate) or may perform an automated licence grant acquisition. The host/compute platform may then store these licence grants against the user ID for use in future checks.
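The fallback to the originating platform can be sketched as below. The remote call is represented by a caller-supplied function so that no transport or API is implied; `check_with_origin`, the `origin_licence_manager` context key and the grant dictionaries are hypothetical.

```python
def check_with_origin(spec, user_id, local_grants, context, call_origin):
    """Check a licence requirement locally, then via the originating platform.

    spec:         dict with a "licence_id" key
    local_grants: dict of user ID -> list of grants already stored on this platform
    context:      execution context; "origin_licence_manager" identifies the
                  licence manager of the originating platform
    call_origin:  callable(endpoint, user_id, spec) -> (accepted, grants)
    """
    held = local_grants.setdefault(user_id, [])
    if any(g["licence_id"] == spec["licence_id"] for g in held):
        return True  # satisfied locally, no remote call needed

    accepted, grants = call_origin(context["origin_licence_manager"], user_id, spec)
    if accepted:
        # Store any returned (signed) grants against the user ID for future checks.
        held.extend(grants)
    return accepted
```

Storing the returned grants means a repeated check for the same user and licence is answered without contacting the originating platform again.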

Note that cross-platform executions may involve running, for example, a processing chain initiated from platform A with a component involving a commercially licensed compute service from platform B running on platform C. In these cases platform B may check that the user has accepted its platform acceptable use policy by contacting platform A, then fetch the compute service from platform C, which will also directly contact platform A to ensure that licences are available before returning the container to platform B.

|

Work In Progress

Sequence diagrams that represent these interactions will be provided within this section. |

Licence Acquisition

Unless managed by an external service, users must be able to view and accept terms and purchase licence grants using the licence manager. For licences where no licence grants must be bought this is very simple - for example, the licence manager may provide APIs enabling the UI to fetch licence text and submit acceptance. This can be done from a resource information display page or following a refused request.

Where a licence grant must be bought the flow for the user is managed by other components. A user interface may be used to choose licence attributes or particular subsets of data, for example, or a user may have the option to allow automatic purchases as data is accessed. The licence manager must support this functionality in the following ways:

-

A human-readable description of the pricing model and prices is included with the licence metadata. This should be displayed to the user.

-

The licence manager can accept a licence requirement specification and turn it into either a product code and quantity (which the caller can then pass to the pricing engine) or information on which additional fields are required. The field names, types and UI information are supplied by other services as part of the resource definition.

-

The licence manager can accept a command to buy a specified licence. It will then emit a billing event. This may happen synchronously or asynchronously depending on the needs of the platform’s billing model.

When federated access is involved, such as when a processing chain runs some components on another platform or when data or processing services are transferred to run locally, a user may need to accept licence terms or acquire a licence grant for a resource which is not published via the home platform. This must always be initiated from the home platform, either in advance of the execution or in response to an event returned by a host platform. For terms acceptance the licence manager must contact the host platform and transfer the necessary T&C data. For a (commercial) licence grant, the licence manager must ask the billing manager to authorize a payment to the host platform and then make a request to the host licence manager to buy the licence (specifying the payment ID). The host licence manager must verify the price before asking its own billing manager to clear the payment. It should then record the licence grant as well as returning it to the home platform.
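The federated purchase of a commercial licence grant can be sketched as a short exchange between the home billing manager and the host licence manager. All class and method names here are hypothetical, and the host billing manager is reduced to a callable that forwards the clearing request to the home platform.

```python
import itertools
from decimal import Decimal


class BillingManager:
    """Home-platform billing manager holding payment authorizations (hypothetical)."""

    def __init__(self):
        self._ids = itertools.count(1)
        self.payments = {}

    def authorize(self, payee, amount):
        payment_id = f"pay-{next(self._ids)}"
        self.payments[payment_id] = {"payee": payee, "authorized": amount,
                                     "cleared": Decimal("0")}
        return payment_id

    def clear(self, payment_id, amount):
        payment = self.payments[payment_id]
        if payment["cleared"] + amount > payment["authorized"]:
            raise ValueError("clearing exceeds the authorized amount")
        payment["cleared"] += amount


class HostLicenceManager:
    """Host-platform licence manager selling grants for its own licences."""

    def __init__(self, prices, clear_payment):
        self.prices = prices                # licence ID -> price
        self.clear_payment = clear_payment  # stands in for the host billing manager
        self.grants = []

    def buy(self, user_id, licence_id, payment_id, authorized_amount):
        price = self.prices[licence_id]
        # Verify the price before asking for the payment to be cleared.
        if authorized_amount < price:
            raise ValueError("authorized payment does not cover the licence price")
        self.clear_payment(payment_id, price)
        grant = {"user_id": user_id, "licence_id": licence_id, "payment_id": payment_id}
        self.grants.append(grant)           # record the grant on the host
        return grant                        # and return it to the home platform


def buy_federated_grant(home_billing, host_lm, user_id, licence_id, quoted_price):
    """Home-platform side: authorize a payment, then buy from the host."""
    payment_id = home_billing.authorize("host-platform", quoted_price)
    return host_lm.buy(user_id, licence_id, payment_id, quoted_price)
```

If the quoted price is too low the host refuses before any clearing takes place, so the authorization remains uncleared on the home platform.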

Licence Administration

Resource owners must be able to configure licences. The UIs and APIs allowing them to do this must interact with the licence manager (and the pricing engine) to configure their licences. This includes only the licences themselves - assignment of licence requirements to resources is out of the licence manager’s scope.

Porting Licences Within the Federation

In some cases users may have multiple home platforms, initiating some workloads from different locations. To ensure that users can use their licences for workloads initiated across all locations, licence 'porting' may be used.

A user 'ports' a licence from one platform to another by using OAuth to authorize the licence manager on the local platform to access their licences on another. This is only permitted if the licences have been marked as 'portable' by the licenser.

This mechanism may be used for two purposes. In the first, a publisher has published their Resource on both platforms (which may be done to permit lower processing latencies, lower payment processing costs or the use of proprietary features). The platform receiving the licence must verify its signature using the licenser’s public key before accepting it. In the second case the Resource is not available on the receiving platform but may still be used in cross-platform workflows (including the case when a processing service is transferred from a remote host platform to execute locally).

4.2. Architecture Overview

|

Work In Progress

This section focuses on HOW the functionality is provisioned, and how the building blocks are connected to each other.

|

4.2.1. Login Service

The Login Service is an OIDC Provider that provides a ‘Login With’ service that allows the end-user to select their Identity Provider for purposes of authentication.

The Login Service is designed to support the onward forwarding of the authentication request through external identity services, which should be expected to include:

-

EduGain

-

GitHub

-

Google

-

Twitter

-

Facebook

-

LinkedIn

-

Others (to be defined)

The Login Service must establish itself as a client (Relying Party) of all supported external IdPs, with appropriate trust relationships and support for their authentication flows.

The primary endpoints required to support the OIDC flows are as follows (these endpoints are taken, by way of example, from Okta OIDC discovery metadata, https://micah.okta.com/oauth2/aus2yrcz7aMrmDAKZ1t7/.well-known/openid-configuration):

- authorization_endpoint (/authorize)

-

To initiate the authentication, and to return the access tokens / code grant (depending on flow).

- token_endpoint (/token)

-

To exchange the code grant for the access tokens.

- userinfo_endpoint (/userinfo)

-

To obtain the user information (claims) in accordance with the scopes requested in the authorization request.

- jwks_uri (/keys)

-

To obtain signing keys for Token validation purposes.

- end_session_endpoint (/logout)

-

To log out the user from the Login Service, i.e. clear session cookies etc. Note that, because the actual IdP is external to the Login Service, any session cookies maintained by the external IdP would remain in place for a future authentication flow.

- introspection_endpoint (/introspect)

-

Used by clients to verify access tokens.

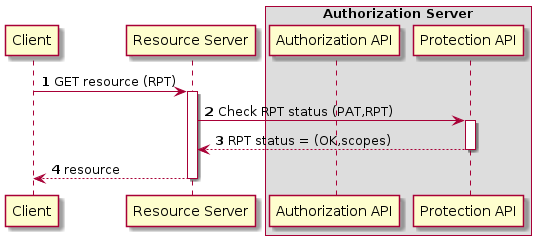

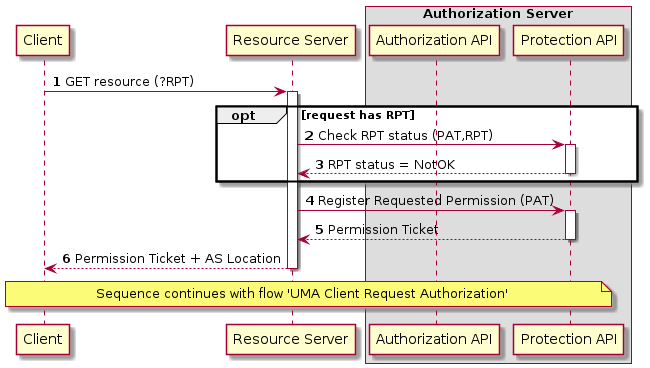

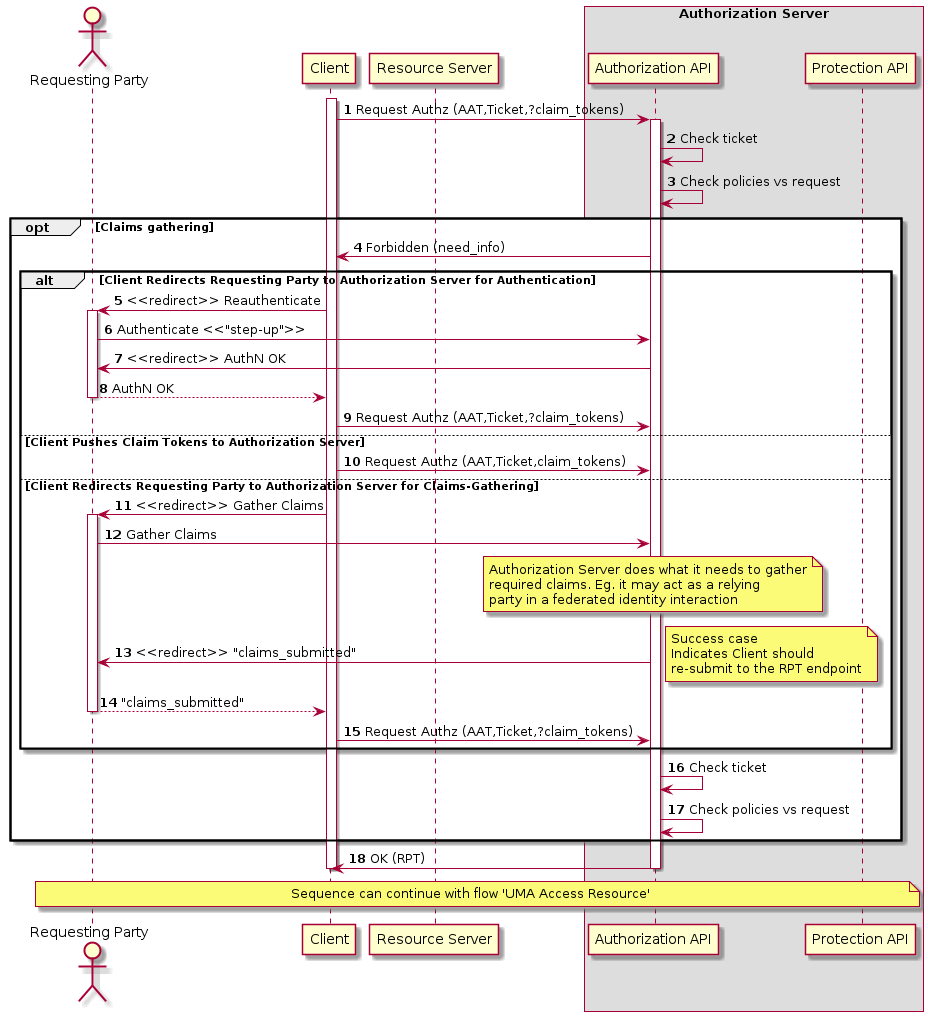

- revocation_endpoint (/revoke)

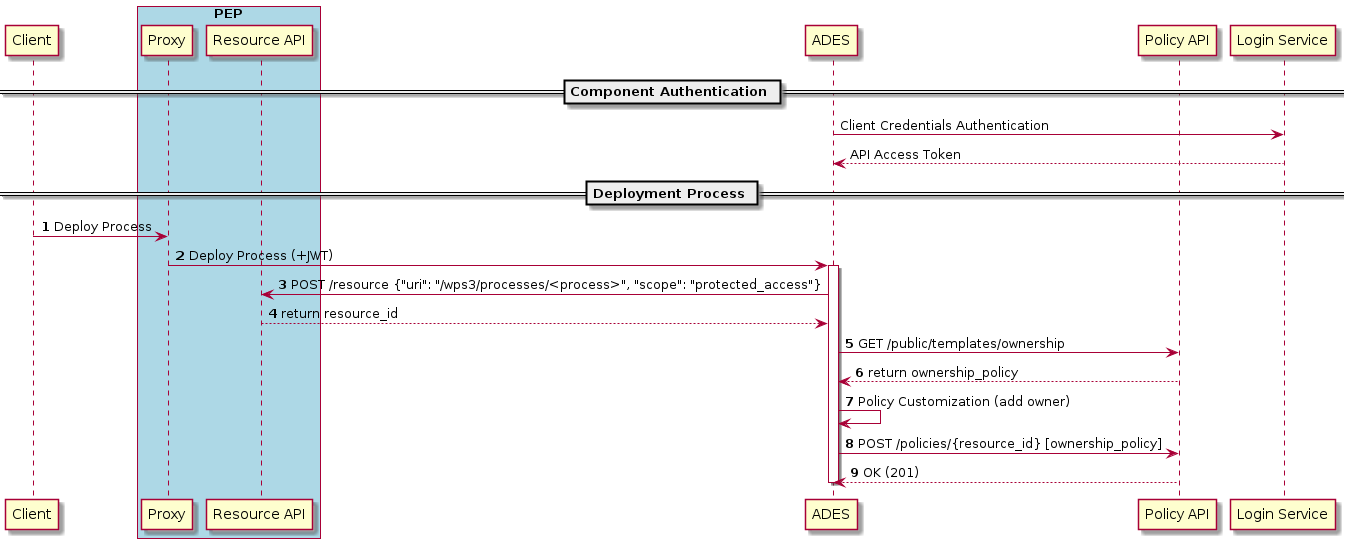

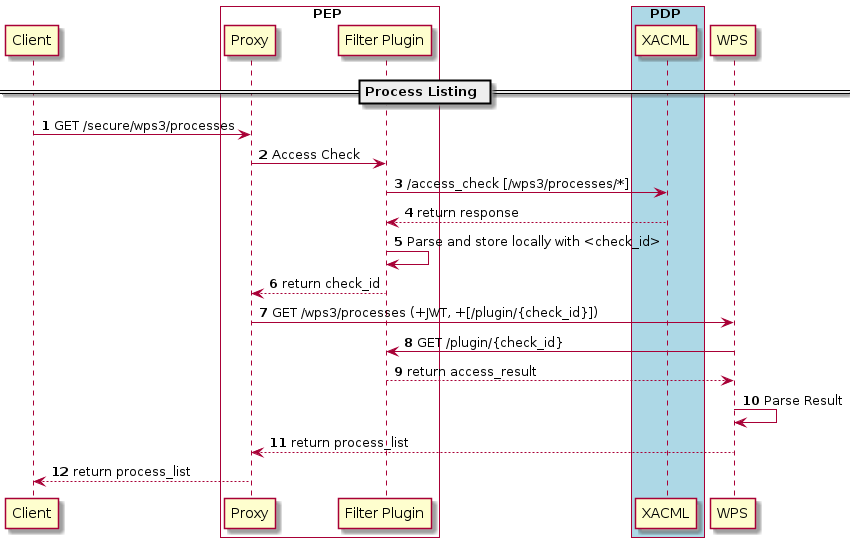

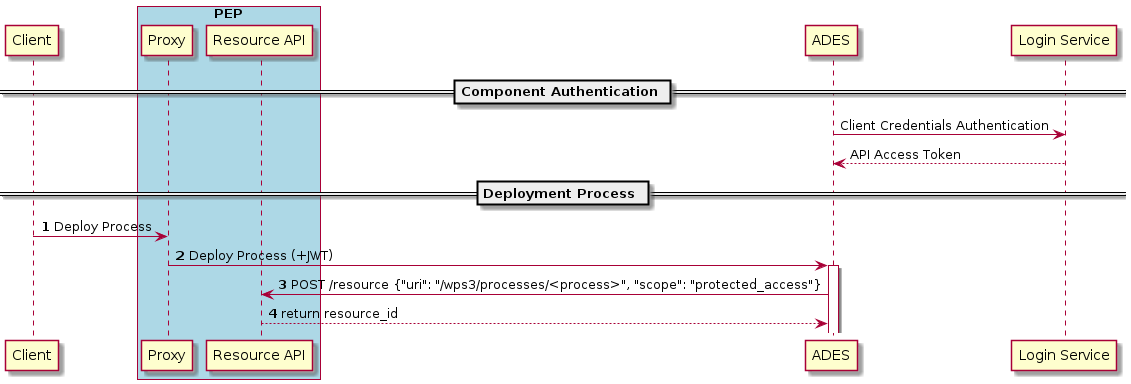

-